A Trillion Hours


The web is pretty big. Researchers at Google won’t say how many pages Google indexes, but they recently said that their inspection of the web reveals more than one trillion unique URLs. It’s difficult to know what to count as a unique page because, as they explain, some sites, such as a web calendar, can generate an infinite number of pages if you keep clicking the “next day” link. The very first public web page was created in August 1991. So we (the collective YOU) have created 1 trillion pages of content in 6,200 days.
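The 6,200-day figure is simple date arithmetic. The sketch below uses two assumed endpoints that the text does not state: August 6, 1991, the date commonly cited for the first public web page, and July 25, 2008, taken here as the date of Google's trillion-URL announcement.

```python
from datetime import date

# Assumed endpoints (not given in the text above):
FIRST_PUBLIC_PAGE = date(1991, 8, 6)       # commonly cited date of the first public web page
TRILLION_ANNOUNCEMENT = date(2008, 7, 25)  # assumed date of Google's trillion-URL announcement

# Subtracting two dates yields a timedelta; .days is the whole-day difference.
days = (TRILLION_ANNOUNCEMENT - FIRST_PUBLIC_PAGE).days
print(days)  # 6198, i.e. roughly 6,200 days
```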

I can assure you that 6,000 days ago, way back in 1991 — or even as recently as ten years ago in 1998 — no one would have believed that we could create 1 trillion web pages so fast. The obvious question then was, who would pay for all this? Who has the time?

To create one trillion pages takes a LOT of time. If we conservatively say that each URL takes, on average, one hour of research, composition, design and programming to produce, then the web entails 1 trillion hours of labor at the minimum. We could probably safely double that to include the other trillion web pages that have disappeared in the last 15 years, but let’s not.

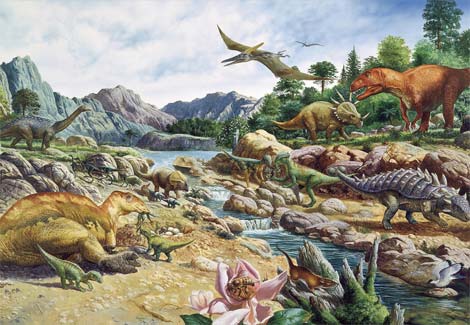

One trillion hours equals 114 million years. If only one person were working on the web, he or she would have had to begin back in the Cretaceous period to get where we are today. But running in parallel, it takes 114 million people working around the clock for one year to produce one trillion URLs. Since even the most maniacal webmaster sleeps now and then, if we reduce the workday to only 8 hours, or one third of the day, then it takes 114 million folks 3 years of full-time work to produce the web. That equals 342 million worker-years.
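A quick check of these conversions, as a minimal Python sketch. It assumes a 365-day year and, like the text, an 8-hour workday worked every day of the year (no weekends or holidays), so one "worker-year" here is 2,920 hours.

```python
HOURS_PER_YEAR = 24 * 365  # a round-the-clock year, ignoring leap days
HOURS_PER_WORKDAY = 8      # the text's "one third of the day"
total_hours = 1e12         # one trillion URLs at one hour each

# One person working nonstop:
solo_years = total_hours / HOURS_PER_YEAR
# Total effort if everyone works 8-hour days, every day of the year:
worker_years = total_hours / (HOURS_PER_WORKDAY * 365)

print(f"{solo_years / 1e6:.0f} million years")           # 114 million years
print(f"{worker_years / 1e6:.0f} million worker-years")  # 342 million worker-years
```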

But since we’ve had 15 years to construct this great work, we needed only 22.8 million webworkers working full-time over that span (342 million worker-years divided by 15 years). At first glance, that seems like far more people than I think we actually had.
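Spreading the total over the construction period is a single division. This sketch recomputes the worker-years under the same assumption as the text (8-hour days, every day of a 365-day year) and uses the text's 15-year span.

```python
worker_years = 1e12 / (8 * 365)  # one trillion hours at 8 hours a day, every day
YEARS_OF_WEB = 15                # construction period assumed in the text

# Full-time workers needed if the effort is spread evenly over the period.
webworkers = worker_years / YEARS_OF_WEB
print(f"{webworkers / 1e6:.1f} million full-time webworkers")  # 22.8 million
```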

A very large portion of this immense load of work has been done for free. In the past I calculated that 40% of the web was done non-commercially. But that figure included government and non-profits, which do pay their workers. I would guess that 80% of the web is produced for pay. (If you have a better figure, post a comment.) So we take 80% of 342 million worker-years to get 273 million paid worker-years. What are their salaries worth? In other words, what is the replacement cost of the web today? If all the backup hard drives disappeared and we had to reconstruct the trillion URLs of the web, what would it cost? Put another way, how much would it cost to refill the content of the One Machine?

At the bargain rate of $20,000 per year, it would cost roughly $5.5 trillion. Multiply or divide that by whatever factor you think is necessary to make the salary more realistic. Maybe it takes less than an hour on average to create the content of a web page; maybe it takes more. But I suspect the order of magnitude is close.
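The replacement-cost estimate chains the earlier numbers together. Here is a minimal sketch that exposes each assumption as a parameter, so the "multiply or divide" adjustment becomes a single argument change. The defaults are the essay's figures (one hour per page, 80% paid, $20,000 a year, 8-hour days every day of the year); the function and parameter names are hypothetical, made up for illustration. With the defaults it comes to about $5.48 trillion. Rounding to 273 million paid worker-years first gives about $5.46 trillion.

```python
def replacement_cost(
    pages=1e12,           # unique URLs
    hours_per_page=1.0,   # the essay's conservative average
    paid_share=0.8,       # guessed fraction of the web produced for pay
    salary=20_000,        # "bargain" annual salary in dollars
    hours_per_workday=8,  # 8-hour days, every day of a 365-day year
):
    """Back-of-envelope cost, in dollars, to rebuild the paid share of the web."""
    worker_years = pages * hours_per_page / (hours_per_workday * 365)
    return worker_years * paid_share * salary


print(f"${replacement_cost() / 1e12:.2f} trillion")  # $5.48 trillion
```

Because every factor enters as a product or quotient, doubling `hours_per_page` or `salary` doubles the result, and halving either one halves it.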

In the first 6,000 days of the web we’ve put in a trillion hours, a trillion pages, and 5 trillion dollars. What can we expect in the next 6,000 days?