How Will the Miracle Happen Today?

When I was in my twenties I would hitchhike to work every day. I’d walk down three blocks to Route 22 in New Jersey, stick out my thumb, and wait for a ride to work. Someone always picked me up. I had to punch in for my job as a packer at a warehouse at 8 o’clock sharp, and I can’t remember ever being late. It never ceased to amaze me, even then, that the kindness of strangers could be so dependable. Each day I counted on the service of ordinary commuters who had lives full of their own worries, and yet without fail, at least one of them would do something kind, as if on schedule. As I stood there with my thumb outstretched, the question in my mind was simply: “How will the miracle happen today?”

Shortly after that rare stint of a real job, I took my wages and split for Asia, where I roamed off and on for the next eight years. I lost track of the number of acts of kindness aimed at me, but they arrived as dependably as my daily hitchhiking miracle. Random examples: In the Philippines a family living in a shack opened their last can of tinned meat as a banquet for me, a stranger who needed a place to crash. Below a wintry pass north of Gilgit in the Pakistan Himalayas, a group of startled firewood harvesters shared their tiny shelter and ash-baked bread with me when I bounded unannounced into their campfire circle one evening. We ended up sleeping like sardines under a single home-woven blanket while snow fell. In Taiwan, a student I met on the street one day befriended me in the way familiar to most travelers, but surprised me by offering me a place at his family’s apartment in Taipei. While he was away at school, I sat in on the family meals and had my own bedroom for two weeks.

One remembrance triggers another; I could easily list thousands of such gestures, because – and this is important – not only did I readily accept such gifts, but I eventually came to rely on them being offered. I could never guess who the messenger would be, but kindness never failed to materialize once I put myself in some position to receive it.

As in my hitchhiking days, I began each day on the road in Asia and elsewhere with the recurring question: “How will the miracle happen today?” After a lifetime of relying on such benevolence, I have developed a theory of what happens in these moments, and it goes like this.

Kindness is like a breath. It can be squeezed out, or drawn in. You can wait for it, or you can summon it. To solicit a gift from a stranger takes a certain state of openness. If you are lost or ill, this is easy, but most days you are neither, so embracing extreme generosity takes some preparation. I learned from hitchhiking to think of this as an exchange. During the moment the stranger offers his or her goodness, the person being aided can reciprocate by offering the stranger degrees of humility, dependency, gratitude, surprise, trust, delight, relief, and amusement. It takes some practice to enable this exchange when you don’t feel desperate. Ironically, you are less inclined to be ready for the gift when you are feeling whole, full, complete, and independent!

One might even call the art of accepting generosity a type of compassion. The compassion of being kinded.

One year I rode my bicycle across America, from San Francisco to New York. I started out camping in state parks, but past the Rockies, parks became scarce, so I switched to camping on people’s lawns. I worked up a routine. As darkness fell, I began scouting the homes I passed for a likely candidate: neat house, big lawn in the back, easy access for my bike. When I selected the lucky home, I parked my bag-loaded bike in front of the door and rang the bell. “Hello,” I’d say. “I’m riding my bike across America. I’d like to pitch my tent tonight where I have permission and where someone knows where I am. I’ve just eaten dinner, and I’ll be gone first thing in the morning. Would you mind if I put up my tent in your backyard?”

I was never turned away, not once. And there was always more. It was impossible for most folks to sit on their couch and watch TV while a guy who was riding his bicycle across America was camped in their backyard. What if he was famous? So I was usually invited into their home for dessert and an interview. My job in this moment was evident: I was to relate my adventure. I was to help them enjoy a thrill they secretly desired but would never accomplish. My account in their kitchen would make this legendary ride part of their lives. Through me and my retelling of my journey, they would get to vicariously ride a bicycle across America. In exchange I would get a place to camp and a dish of ice cream. It was a sweet deal that benefited both of us.

The weird thing is that I was, and still am, not sure I would have done what they did and let a stranger sleep in my backyard. The “me” on the bicycle had a wild tangled beard, had not showered for weeks, and appeared destitute (my whole transcontinental trip cost me $500). I am not sure I would invite a casual tourist I met to take over my apartment and cook for him, as many have done for me. I definitely would not hand him the keys to my own car, as a hotel clerk in Dalarna, Sweden, did one midsummer day when I asked her how I could reach the painter Carl Larsson’s house 150 miles away.

The many times I was down or dazed and a stranger interrupted their life to assist me are less of a mystery to me than when, for no noble reason at all, an impoverished legendary Chinese painter insists that I take one of his treasures. I’d like to think that I would without hesitation drive far out of my way to bring a sick traveler to the hospital (in the Philippines), but I have trouble seeing myself emptying my bank account to purchase a boat ticket for someone who has more money than I do. And if I were a cold drink seller in Oman, I would definitely not give cold drinks away for free just because the recipient was a guest in my poor country. But those kinds of illogical blessings happen when you are open to a gift.

Yet while I rely on miracles, I don’t believe in saints. There are no saints even among the gentle monks of Asia, or I should say, especially among the monks. Rather, generosity is rampant in everyday lives, but no more in one place, race, or creed than in others. We expect altruism among kinfolk and neighbors, although the world would, as we all know, be a better place if neighborhood and family kindness happened even more.

Altruism among strangers, on the other hand, is simply strange. To the uninitiated its occurrence seems as random as cosmic rays: a hit-or-miss blessing that makes a good story. The kindness of strangers is a gift we never forget.

But the strangeness of “kindees” is harder to explain. A kindee is what you turn into when you are kinded. Curiously, being a kindee is an unpracticed virtue. Hardly anyone hitchhikes anymore, which is a shame, because hitchhiking encourages the habit of generosity in drivers, and it nurtures in hikers the grace of gratitude and the patience of being kinded. But the stance of receiving a gift – of being kinded – is important for everyone, not just travelers. Many people resist being kinded unless they are in dire, life-threatening need. But a kindee needs to accept gifts more easily. Since I have had so much practice as a kindee, I have some pointers on how kindness is unleashed.

I believe the generous gifts of strangers are actually summoned by a deliberate willingness to be helped. You start by surrendering to your human need for help. That we cannot be helped until we embrace our need for help is another law of the universe. Receiving help on the road is a spiritual event triggered by a traveler who surrenders his or her fate to the eternal Good. It’s a move away from whether we will be helped, to how: how will the miracle unfold today? In what novel manner will Good reveal itself? Who will the universe send today to carry away my gift of trust and helplessness?

When the miracle flows, it flows both ways. When an offered gift is accepted, then the threads of love are knotted, snaring both the stranger who is kind, and the stranger who is kinded. Every time a gift is tossed it lands differently – but knowing that it will arrive in some colorful, unexpected way is one of the certainties of life.

We are at the receiving end of a huge gift simply by being alive. However you calculate it, our time here is unearned. Maybe you figure your existence is the result of a billion unlikely accidents, and nothing more; then certainly your life is an unexpected, lucky, and undeserved surprise. That’s the definition of a gift. Or maybe you figure there’s something bigger behind this small human reality; your life is then a gift from the greater to the lesser. As far as I can tell none of us have brought about our own existence, nor done much to earn such a remarkable experience. The pleasures of colors, cinnamon rolls, bubbles, touchdowns, whispers, long conversations, sand on your bare feet – these are all undeserved rewards.

All of us begin in the same place. Whether sinner or saint, we are not owed our life. Our existence is an unnecessary extravagance, a wild gesture, an unearned gift. Not just at birth. The eternal surprise is being funneled to us daily, hourly, minute by minute, every second. As you read these words, you are rinsed with the gift of time. Yet, we are terrible recipients. We are no good at being helpless, humble, or indebted. Being needy is not celebrated on daytime TV shows, or in self-help books. We make lousy kindees.

I’ve slowly changed my mind about spiritual faith. I once thought it was chiefly about believing in an unseen reality; that it had a lot in common with hope. But after many years of examining the lives of the people whose spiritual character I most respect, I’ve come to see that their faith rests on gratitude, rather than hope. The beings I admire exude a sense of knowing they are indebted, of resting upon a state of thankfulness. They recognize they are at the receiving end of an ongoing lucky ticket called being alive. When the truly faithful worry, it’s not about doubt (which they have); it’s about how they might not maximize the tremendous gift given them. How they might be ungrateful by squandering their ride. The faithful I admire are not certain about much except this: that this state of being embodied, inflated with life, brimming with possibilities, is so over-the-top unlikely, so extravagant, so unconditional, so far out beyond physical entropy, that it is indistinguishable from love. And most amazing of all, like my hitchhiking rides, this love gift is an extravagant gesture you can count on. This is the meta-miracle: that the miracle of gifts is so dependable. No matter how bad the weather, soiled the past, broken the heart, hellish the war – all that is behind the universe is conspiring to help you – if you will let it.

My New Age friends call that state of being pronoia, the opposite of paranoia. Instead of believing everyone is out to get you, you believe everyone is out to help you. Strangers are working behind your back to keep you going, prop you up, and get you on your path. The story of your life becomes one huge elaborate conspiracy to lift you up. But to be helped you have to join the conspiracy yourself; you have to accept the gifts.

Although we don’t deserve it, and have done nothing to merit it, we have been offered a glorious ride on this planet, if only we accept it. To receive the gift requires the same humble position a hitchhiker gets into when he stands shivering on the side of the empty highway, cardboard sign flapping in the cold wind, and says, “How will the miracle happen today?”

Gar's Tips & Tools - Issue #207

10 Great Tool Gift Ideas

I love it when smart and talented makers that I follow give their annual tool recommendations. Case in point is Chris Notap’s tool gift guide for 2025. Every one of these is a winner, from the “I am totally ordering a bunch of these for presents” Olight iMini flashlight to the “I also have and highly recommend” DeWalt Oscillating Tool. Besides being a great tool, the DeWalt will always hold a soft spot in my heart ‘cause it’s the last tool my dear ol’ dad gave to me before he died. Other standouts include the Knipex 12” pliers wrench, which looks amazing, the Apple AirTag battery life extender, and the BlueDriver Bluetooth car scan tool.

Tips & Tricks for Using J-B Weld

David Riddle’s video on J-B Weld (and J-B Kwik) is one of those tutorials that instantly upgrades your knowledge and approach to a material or process. His whole philosophy for using J-B Weld boils down to: Preparation is everything. Sand until you’ve got adequate tooth, clean with acetone, mix on something non-absorbent (don’t use paper, card, etc.), spread a thin, even film (he uses a plastic knife as if it were a tiny mason’s trowel), and warm the epoxy so it flows into the tooth instead of sitting on the bonding surface. No heavy clamping, no cardboard mixing trays, no wishing. Just clean surfaces, good texture, and slow-cure J-B Weld doing what it does best.

Some Practical 3D Printed Tools

As you likely know, there’s such a profusion of 3D printable tools out there (with many of them less than adequate as serious tool replacements) that the whole category of videos about them is easy to ignore. In this video, Peter Brown prints and tests five actually useful tools. The big aha for me here was the hex-key handles. I hate futzing with hex keys, especially when you can’t bring proper torque to bear. These handsome little handles solve for that. Other stand-out prints include a snappy one-handed broom hook, a router bits organizer (from Zack Freedman’s Gridfinity system), and a rare earth magnet dispenser.

The History of the Allen Hex Key Wrench

We are all intimately familiar with that little L-shaped tool that carries a dude’s name. The Allen hex key wrench is so ordinary it’s basically shop and household wallpaper. Yet its impact on manufacturing and domestic life is undeniable. This episode of History of Simple Things (a channel I just discovered) explores how a small Hartford company, and an engineer named William G. Allen, helped dethrone the slippery, injury-prone slotted screw and reshape modern manufacturing in the process. It’s a century-long tale of safety, standardization, and one odd bit of branding that stuck like Velcro and Kleenex. The video is a reminder that even the homeliest of tools have hidden lineages worth appreciating, especially the ones rattling around the bottoms of your kitchen junk drawers.

Regularly Rethinking Your Org

I’m not the most organized person in the world. I’m not terrible; I have my moments of clarity and tidy thinking, but I’m not obsessive or even particularly consistent about it. An example: For years I’ve had a 5-drawer wooden rolly cart by my workbench. I have most of my day-to-day tools on or around the bench, and this cart has additional tools and drawers organized by different activities: small supplies, painting, sanding, etc. Only the top drawer is tool-devoted, and over the years, it has become crammed with tools. Yesterday, I realized that the top drawer includes tools I use on a regular basis mixed in with tools I rarely use. Meanwhile, some drawers in the cart are filled with materials I might only use once or twice a year. So, I moved those materials to shelves and created two tool drawers: one for everyday tools, one for special-use tools. I can already tell what a difference this will make as I quickly reach for a tool and don’t have to spend five minutes shifting and untangling stuff to get it in hand. The takeaway for me is that I’m going to start re-organizational thinking on a regular cadence (every quarter?). Pick some area of my shop and ask myself: “Is this really the right system? How am I actually using the space and the tools, supplies, and materials within it? What can I improve?” I don’t do this type of thinking nearly enough. Do you?

Maker’s Muse

Some really eye-opening and inspiring ideas here for gates, doors, windows, skylights, pool covers, and more.

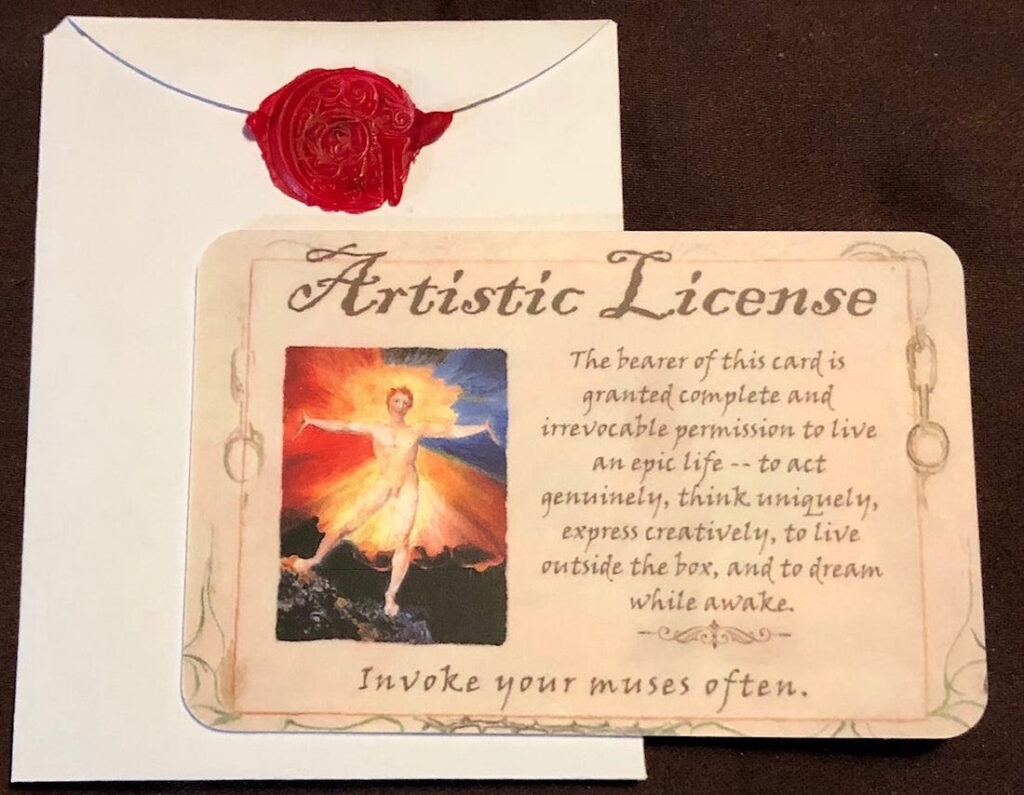

Do You Need an Artistic License?

I created this Artistic License years ago and have been selling them every holiday season. I hear from people all the time that they still have theirs in their wallet and get a kick out of it when they encounter it. Come on, admit it, we all want to feel like we have artistic license. I sell them for $5 each, postpaid, or 5 for $20 (pp). Foreign orders require the exact shipping cost. Message me at garethbranwyn@mac.com if interested.

Your Chance to Win a Flashlight!

I was so intrigued by the Olight iMini flashlight that Chris Notap recommended above that I immediately bought one, and man, is it a cool little bit o’ kit. It’s everything he says and more. Every keychain (and home, car, boat, shop) needs one. So, I decided to give three of them to newsletter readers!

Here’s how it works:

- Every existing newsletter subscriber will get one entry in the drawing. Paid subscribers will get two entries.

- If you bring on a new subscriber, you’ll get one drawing entry for each new signee (just email me the addresses they used to sign up).

- If you upgrade to a paid subscription, you will get three entries.

I will do the drawing on Dec 16th and have the lights direct-shipped immediately. I can only mail to US subscribers. If you are out of the country and you win, I will send you a PDF copy of my book Tips and Tales from the Workshop, Vol. 2.

Paying AIs to Read My Books

Some authors have it backwards. They believe that AI companies should pay them for training AIs on their books. But I predict in a very short while, authors will be paying AI companies to ensure that their books are included in the education and training of AIs. The authors (and their publishers) will pay in order to have influence on the answers and services the AIs provide. If your work is not known and appreciated by the AIs, it will be essentially unknown.

Recently, the AI firm Anthropic agreed to pay book authors a collective $1.5 billion as a penalty for making an illegal copy of their books. Anthropic had been sued by some authors for using a shadow library of 500,000 books that contained digital versions of their books, all collected by renegade librarians with the dream of making all books available to all people. Anthropic had downloaded a copy of this outlaw library in anticipation of using it to train their LLMs, but according to court documents, they did not end up using those books for training the AI models they released. Even if Anthropic did not use this particular library, they used something similar, and so have all the other commercial frontier LLMs.

However, the judge ruled that making an unauthorized copy of the copyrighted books was not fair use, whether or not they were used for training, and under the settlement the authors of all the copied books were awarded about $3,000 per book in the library.

The court administrators in this case, Bartz et al. v. Anthropic, have released a searchable list of the affected books on a dedicated website. Anyone can search the database to see if a particular book or author is included in this pirate library and, of course, whether they are due compensation. My experience with class action suits like this is that award money very rarely reaches people on the street. Most of the money is consumed by the lawyers on all sides. I notice that in this case, only half of the amount paid per book is destined to actually go to the author. The other 50% goes to the publishers. Maybe. And if it is a textbook, good luck with getting anything.
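As a rough back-of-envelope check, assuming the round figure of 500,000 books used here and a straight 50/50 author/publisher split, and ignoring legal fees entirely, the per-book numbers work out like this:

$$
\frac{\$1{,}500{,}000{,}000}{500{,}000\ \text{books}} = \$3{,}000\ \text{per book},
\qquad
\tfrac{1}{2} \times \$3{,}000 = \$1{,}500\ \text{per book to the author, before fees}
$$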

I am an author, so I checked the Anthropic case list. I found four of my five books published in New York included in this library. I feel honored to be included in a group of books that can train AIs that I now use every day. I feel flattered that my ideas might be able to reach millions of people through the chain of thought of LLMs. I can imagine some authors feeling disappointed that their work was not included in this library.

However, Anthropic claims it did not use this particular library for training its AIs. They may have used other libraries, and those libraries may or may not have been “legal” in the sense of having been paid for. The legality of using digitized books for anything is still in dispute. For example, Google digitizes books for search purposes, but shows only small snippets of each book as results. Can it use the same digital copy it has already made for training AI? The ruling in the Bartz v. Anthropic case was that, yes, using a copy of a book for training AI is fair use, if the copy was obtained legitimately. Anthropic was penalized not for training AI on books, but for having in its possession copies of books it had not paid for.

This is just the first test case of many likely to come, since it is clear that copyright law is not adequate to cover this new use of text. Protecting copies of text – which is what copyright provisions do – is not really pertinent to learning and training. AIs don’t need to keep a copy; they just have to read it once. Copies are immaterial. We probably need other types of rights and licenses for intellectual property, such as a Right of Reference, or something like that. But the rights issue is only a distraction from the main event, which is the rise of a new audience: the AIs.

Slowly, we’ll accumulate some best practices regarding what is used to train and school AIs. The curation of the material used to educate the AI agents giving us answers will become a major factor in deciding whether we use and rely on them. There will be a minority of customers who want the AIs to be trained with material that aligns with their political bent. Devout conservatives might want a conservatively trained AI; it will give answers to controversial questions in the manner they like. Devout liberals will want one trained with a liberal education. The majority of people won’t care; they just want the “best” answer or the most reliable service. We do know that AIs reflect what they were trained on, and that they can be “fine-tuned” with human intervention to produce answers and services that please their users. There is a lot of research on reinforcing their behavior and steering their thinking.

Half a million books sounds like a lot of books to learn from, but there are millions and millions of books in the world already that the AIs have not read because their copyright status is unclear or inconvenient, or they are written in lesser-used languages. AI training is nowhere near done. Shaping this corpus of possible influences will become a science and art in itself. Someday AIs will have really read all that humans have written. Having only 500,000 books forming your knowledge base will soon be seen as quaint, but it also suggests how impactful it can be to be included in that small selection, and that makes inclusion a prime reason why authors will want AIs to be trained on their works now.

The young and the earliest adopters of AI have it set to always-on mode; more and more of their intangible life goes through the AI, and no further. As the AI models become more and more reliable, the young are accepting the conclusions of the AI. I find something similar in my own life. I long ago stopped questioning a calculator, then stopped questioning Google, and now find that most answers from current AIs are pretty reliable. The AIs are becoming the arbiters of truth.

AI agents are used not just to give answers but to find things, to understand things, to suggest things. If the AIs do not know about it, it is equivalent to it not existing. It will become very hard for authors who opt out of AI training to make a dent. There are authors and creators today who do not have any digital presence at all; you cannot find them online; their work is not listed anywhere. They are rare and a minority. As Tim O’Reilly likes to say, the challenge today for most creators is not piracy (illegal copies) but obscurity. I will add, the challenge for creators in the future will not be imitation (AI copy) but obscurity.

If AIs become the arbiters of truth, and if what they trained on matters, then I want my ideas and creative work to be paramount in what they see. I would very much like my books to be the textbooks for AI. What author would not? I would. I want my influence to extend to the billions of people coming to the AIs every day, and I might even be willing to pay for that, or to at least do what I can to facilitate the ingestion of my work into the AI minds.

Another way to think of this is that in this emerging landscape, the audience for books – especially non-fiction books – has shifted away from people towards AI. If you are writing a book today, you want to keep in mind that you are primarily writing it for AIs. They are the ones who are going to read it the most carefully. They are going to read every page word by word, and all the footnotes, and all the endnotes, and the bibliography, and the afterword. They will also read all your books and listen to all your podcasts. You are unlikely to have any human reader read it as thoroughly as the AIs will. After absorbing it, the AIs will do that magical thing of incorporating your text into all the other text they have read, of situating it, of placing it among all the other knowledge of the world – in a way no human reader can do.

Part of the success of being incorporated by AIs is how well the material is presented for them. If a book can be more easily parsed by an AI, its influence will be greater. Therefore many books will be written and formatted with an eye on their main audience. Writing for AIs will become a skill like any other, and something you can get better at. Authors could actively seek to optimize their work for AI ingestion, perhaps even collaborating with AI companies to ensure their content is properly understood and integrated. The concept of "AI-friendly" writing, with clear structures, explicit arguments, and well-defined concepts, will gain prominence, and of course will be assisted by AI.

Every book, song, play, and movie we create is added to our culture. Libraries are special among human inventions. They tend to get better the older they get. They accumulate wisdom and knowledge. The internet is similar in this way, in that it keeps accumulating material and has never crashed, or had to restart, since it began. AIs are very likely similar to these exotropic systems, accumulating endlessly without interruption. We don’t know for sure, but they are liable to keep growing for decades if not longer. At the moment their growth seems open-ended. What they learn today, they will probably continue to know, and their impact today will have compounding influence in the decades to come. Influencing AIs is among the highest-leverage activities available to any human being today, and the earlier you start, the more potent.

The value of an author's work will not just be in how well it sells among humans, but in how deeply it has been incorporated into the foundational knowledge of these intelligent memory-based systems. That potency will be what is boasted about. That will be an author’s legacy.

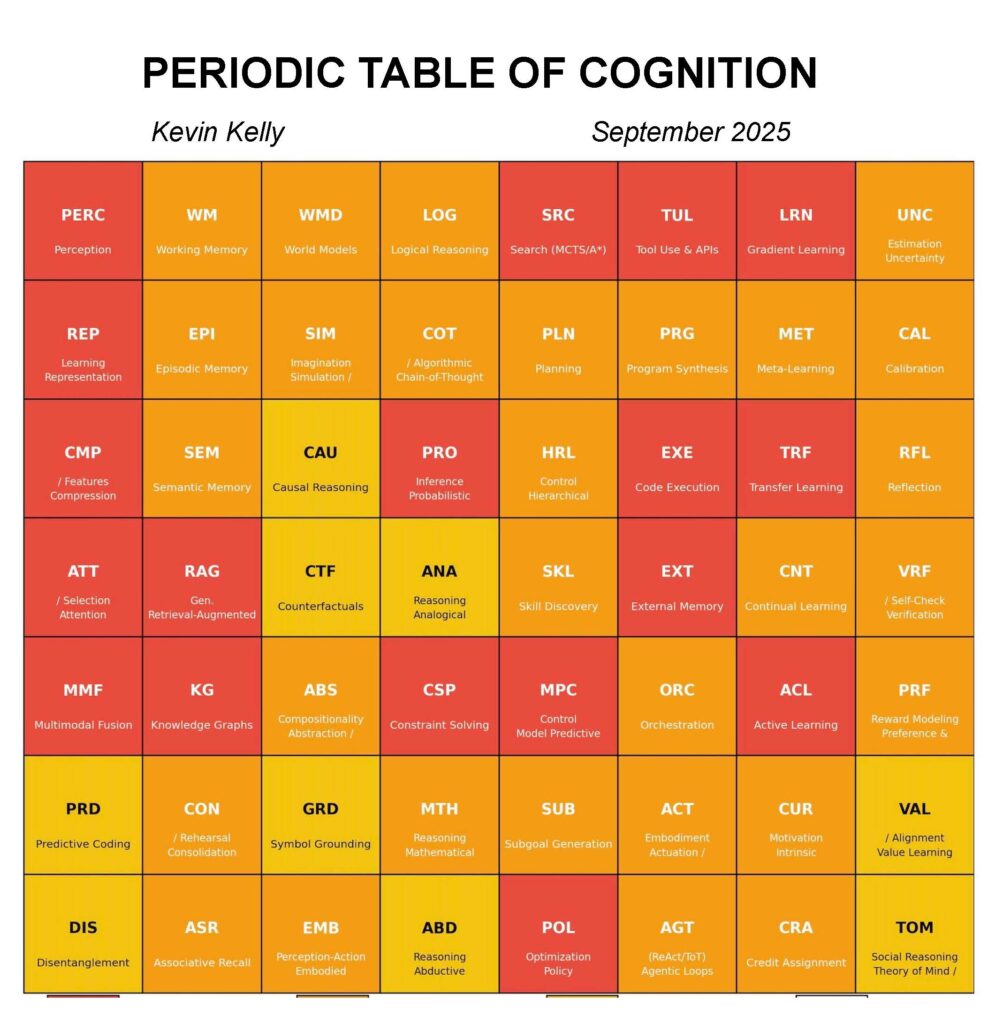

The Periodic Table of Cognition

I’ve been studying the early history of electricity’s discovery as a map for our current discovery of artificial intelligence. The smartest people alive back then, including Isaac Newton, who may have been the smartest person who ever lived, had confident theories about electricity’s nature that were profoundly wrong. In fact, despite the essential role of electrical charges in the universe, everyone who worked on this fundamental force was wrong for a long time. All the pioneers of electricity, such as Franklin, Wheatstone, Faraday, and Maxwell, had a few correct ideas of their own (not shared by all) mixed in with notions that mostly turned out to be flat-out misguided. Most of the discoveries about what electricity could do happened without any knowledge of how it worked. That ignorance, of course, drastically slowed down the advances in electrical inventions.

In a similar way, the smartest people today, especially all the geniuses creating artificial intelligence, have theories about what intelligence is, and I believe all of them (me too) will be profoundly wrong. We don’t know what artificial intelligence is in large part because we don’t know what our own intelligence is. And this ignorance will later be seen as an impediment to the rate of progress in AI.

A major part of our ignorance stems from our confusion about the general category of either electricity or intelligence. We tend to view both electricity and intelligence as coherent elemental forces along a single dimension: you either have more of it or less. But in fact, electricity turned out to be so complicated, so complex, so full of counterintuitive effects that even today it is still hard to grasp how it works. It has particles and waves, and fields and flows, composed of things that are not really there. Our employment of electricity exceeds our understanding of it. Understanding electricity was essential to understanding matter. It wasn’t until we learned to control electricity that we were able to split water, which had been considered an element, into its actual elements; that revealed that water was not a foundational element, but a derivative compound made up of simpler elements.

It is very probable we will discover that intelligence is likewise not a foundational singular element, but a derivative compound composed of multiple cognitive elements, combined in a complex system unique to each species of mind. The result that we call intelligence emerges from many different cognitive primitives such as long-term memory, spatial awareness, logical deduction, advance planning, pattern perception, and so on. There may be dozens of them, or hundreds. We currently don’t have any idea of what these elements are. We lack a periodic table of cognition.

The cognitive elements will more closely resemble the heavier elements in being unstable and dynamic. Or a better analogy would be to the elements in a biological cell. The primitives of cognition are flow states that appear in a thought cycle. They are like molecules in a cell, which are in constant flux, shifting from one shape to another. Their molecular identity is related to their actions and interactions with other molecules. Thinking is a collective action that happens in time (like temperature in matter), and every mode can only be seen in relation to the other modes before and after it. It is a network phenomenon whose borders are difficult to identify. Each element of intelligence is embedded in a thought cycle and requires the other elements as part of its identity, so each cognitive element can only be described in the context of the cognitive modes adjacent to it.

I asked ChatGPT 5 Pro to help me generate a periodic table of cognition based on what we collectively know so far. It suggested 49 elements, arranged in a table so that related concepts are adjacent. The columns are families, or general categories of cognition such as “Perception”, “Reasoning”, “Learning”, so all the types of perception or reasoning are stacked in one column. The rows are sorted by stages in a cycle of thought. The earlier stages (such as “sensing”) are at the top, while later stages in the cycle (such as “reflect & align”) are at the bottom. So, for example, in the family or category of “Safety” the AIs will tend to estimate uncertainty first, later do verification, and only get to a theory of mind at the end.

The chart is colored according to how much progress we’ve made on each element. Red indicates we can synthesize that element in a robust way. Orange means we can kind of make it work with the right scaffolding. Yellow reflects promising research without operational generality yet.

I suspect many of these elements are not as distinct as shown here (taxonomically I am more of a lumper than a splitter), and I would expect this collection omits many types we are soon to discover, but as a start, this prototype chart serves its purpose: it reveals the complexity of intelligence. It is clear intelligence is compounded along multiple dimensions. We will engineer different AIs to have different combinations of different elements in different strengths. This will produce thousands of types of possible minds. We can see that even today different animals have their own combination of cognitive primitives, arranged in a pattern unique to their species’ needs. In some animals some of the elements — say long-term memory — may exceed our own in strength; of course they lack some elements we have.

With the help of AI, we are discovering what these elements of cognition are. Each advance illuminates a bit of how minds work and what is needed to achieve results. If the discovery of electricity and atoms has anything to teach us now, it is that we are probably very far from having discovered the complete set of cognitive elements. Instead, we are at the stage of believing in ethers, instantaneous action, and phlogiston – a few of the incorrect theories that the brightest scientists once believed.

Almost no thinker, researcher, experimenter, or scientist at that time could see the true nature of electricity, electromagnetism, radiation, and subatomic particles, because the whole picture was hugely unintuitive. Waves, force fields, and the particles inside atoms did not make sense (and still do not make common sense). It took sophisticated mathematics to truly comprehend it, and even after Maxwell described electromagnetism mathematically, he found it hard to visualize.

I expect the same from intelligence. Even after we identify its ingredients, the emergent properties they generate are likely to be obscure and hard to believe, hard to visualize. Intelligence is unlikely to make common sense.

More than a century ago, our use of electricity ran ahead of our understanding of it. We made motors from magnets and coiled wire without understanding why they worked. Theory lagged behind practice. As with electricity, our employment of intelligence exceeds our understanding of it. We are using LLMs to answer questions or to write software without having a theory of intelligence. A real theory of intelligence is so lacking that we don’t know how our own minds work, let alone the synthetic ones we can now create.

The theory of the atomic world needed the knowledge of the periodic table of elements. You had to know all (or at least most) of the parts to make falsifiable predictions of what would happen. The theory of intelligence requires knowledge of all the elemental parts, which we have only slowly begun to identify, before we can predict what might happen next.

The Trust Quotient (TQ)

Wherever there is autonomy, trust must follow. If we raise children to go off on their own, they need to be autonomous and we need to trust them. (Parenting is a school for learning how to trust.) If we make a system of autonomous agents, we need lots of trust between agents. If I delegate decisions to an AI, I then have to trust it, and if that AI relies on other AIs, it must trust them. Therefore we will need to develop a very robust trust system that can detect, verify, and generate trust between humans and machines, and more importantly between machines and machines.

Applicable research in trust follows two directions: understanding better how humans trust each other, and applying some of those principles, in abstract form, to mechanical systems. Technologists have already created primitive trust systems to manage the security of data clouds and communications. For instance, should this device be allowed to connect? Can it be trusted to do what it claims it can do? How do we verify its identity, and its behavior? And so on.

So far these systems are not dealing with adaptive agents, whose behaviors and IDs and abilities are far more fluid, opaque, shifting, and also more consequential. That makes trusting them more difficult and more important.

Today when I am shopping for an AI, accuracy is the primary quality I am looking for. Will it give me correct answers? How much does it hallucinate? These qualities are proxies for trust. Can I trust the AI to give me an answer that is reliable? As AIs start to do more, to go out into the world to act, to make decisions for us, their trustworthiness becomes crucial.

Trust is a broad word that will be unbundled as it seeps into the AI ecosystem. Part security, part reliability, part responsibility, and part accountability, its strands will become more precise as we synthesize and measure them. Trust will be something we’ll be talking about a lot more in the coming decade.

As the abilities and skills of AI begin to differentiate – some are better for certain tasks than others – reviews of them will begin to include their trustworthiness. Just as other manufactured products have specs that are advertised – such as fuel efficiency, or gigabytes of storage, pixel counts, or uptime, or cure rates – so the vendors of AIs will come to advertise the trust quotient of their agents. How reliably reliable are they? Even if this quality is not advertised it needs to be measured internally, so that the company can keep improving it.

When we depend on our AI agent to book vacation tickets, or renew our drug prescriptions, or to get our car repaired, we will be placing a lot of trust in them. It is not hard to imagine occasions where an AI agent can be involved in a life or death decision. There may even be legal liability consequences for how much we can expect to trust AI agents. Who is responsible if the agent screws up?

Right now, AIs bear no responsibility. If they get things wrong, they don't guarantee to fix them, and they take no responsibility for the trouble their errors may cause. In fact, this is currently the key difference between human employees and AI workers. The buck stops with the humans. They take responsibility for their work; you hire humans because you trust them to get the job done right. If it isn't done right, they redo it, and they learn how not to make that mistake again. Not so with current AIs. This makes them hard to trust.

AI agents will form a network, a system of interacting AIs, and that system can assign a risk factor to each task. Some tasks, like purchasing airline tickets or assigning prescription drugs, would have risk scores weighing potential negative outcomes against positive convenience. Each AI agent would itself have a dynamic risk score depending on what its permissions were. Agents would also accumulate trust scores based on their past performance. Trust is very asymmetrical: it can take many interactions over a long time to build, but it can be lost instantly, with a single mistake. The trust scores would be constantly changing and tracked by the system.
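To make that asymmetry concrete, here is a minimal Python sketch of how such a system might track one agent’s trust score. Everything in it is a hypothetical assumption, not a description of any existing system: the class name `AgentTrust`, the 0-to-1 scale, the slow gain after each success, the steep penalty after a failure, and the rule that a task is delegated only when trust meets its risk score.

```python
from dataclasses import dataclass


@dataclass
class AgentTrust:
    """Hypothetical trust ledger for a single AI agent (illustrative constants)."""
    score: float = 0.5    # assumed starting trust on a 0..1 scale
    gain: float = 0.02    # assumed: each success closes 2% of the gap to full trust
    penalty: float = 0.5  # assumed: a single failure wipes out half of current trust

    def record(self, success: bool) -> float:
        """Update the score after one completed task and return the new score."""
        if success:
            # Slow, incremental gain toward 1.0.
            self.score += self.gain * (1.0 - self.score)
        else:
            # Fast, multiplicative loss.
            self.score *= 1.0 - self.penalty
        return self.score

    def may_handle(self, task_risk: float) -> bool:
        """Assumed rule: delegate a task only if trust meets or exceeds its risk score."""
        return self.score >= task_risk


if __name__ == "__main__":
    agent = AgentTrust()
    for _ in range(50):          # fifty successful tasks
        agent.record(True)
    before = agent.score
    agent.record(False)          # one mistake
    print(f"after 50 successes: {before:.3f}, after 1 failure: {agent.score:.3f}")
    print("may book flights (risk 0.6)?", agent.may_handle(0.6))
```

With these assumed constants, fifty successes lift the score from 0.5 to about 0.82, and a single failure drops it to about 0.41, below where it started. A real system would presumably tune such constants per task and per permission level.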

Most AI work will be done invisibly, as agent-to-agent exchanges. Most of the output generated by an average AI agent will only be seen and consumed by another AI agent, one of trillions. Very little of the total AI work will ever be seen or noticed by humans. The number of AI agents that humans interact with will be very few, although they will loom in importance to us. While the AIs we engage with will be rare statistically, they will matter to us greatly, and their trustworthiness will be paramount.

In order to win our trust, an outward-facing AI agent needs to connect with AI agents it can also trust, so a large part of its capabilities will be the skill of selecting and exploiting the most trustworthy AIs it can find. We can expect whole new scams, including fooling AI agents into trusting hollow agents, faking certificates of trust, counterfeiting IDs, and spoofing tasks. Just as in the internet security world, an AI agent is only as trustworthy as its weakest sub-agent. And since sub-tasks can be assigned many levels down, managing quality will be a prime effort for AIs.

Assigning correct blame for errors and rectifying mistakes also becomes a hugely marketable skill for AIs. All systems, including the best humans, make mistakes. No system can be mistake-proof. So a large part of high trust is the accountability of mending one’s errors. The most trusted agents will be those capable of fixing (and trusted to fix!) the mistakes they make, with sufficient smart power to make amends and get it right.

Ultimately the degree of trust we give to our prime AI agent, the one we interact with all day, every day, will be a score that is boasted about, contested, shared, and advertised widely. In other domains, like a car or a phone, we take reliability for granted.

But AI is so much more complex and personal than the other products and services in our lives today that the trustworthiness of AI agents will be a crucial and ongoing concern. Its trust quotient (TQ) may be more important than its intelligence quotient (IQ). Picking and retaining agents with high TQ will be very much like hiring and keeping key human employees.

However, we tend to avoid assigning numerical scores to humans. The AI agent system, on the other hand, will have all kinds of metrics we will use to decide which ones we want to help run our lives. The highest scoring AIs will likely be the most expensive ones as well. There will be whispers of ones with nearly perfect scores that you can't afford. However, AI is a system that improves with increasing returns, which means the more it is used, the better it gets, so the best AIs will be among the most popular AIs. Billionaires use the same Google we use, and are likely to use the same AIs as us, though they might have intensely personalized interfaces for them. These, too, will need to have the highest trust quotients.

Every company, and probably every person, will have an AI agent that represents them inside the AI system to other AI agents. Making sure your personal rep agent has a high trust score will be part of your responsibility. It is a little bit like a credit score for AI agents. You will want a high TQ for yours, because some AI agents won’t engage with agents that have low TQs. This is not the same thing as having a personal social score (like the one China is reputed to have). This is not your score, but the TQ of your agent, which represents you to other agents. You could have a robust social reputation, but your agent could be lousy. And vice versa.

In the coming decades of the AI era, TQ will be seen as more important than IQ.

Emotional Agents

Many people have found the intelligence of AIs to be shocking. This will seem quaint compared to a far bigger shock coming: highly emotional AIs. The arrival of synthetic emotions will unleash disruption, outrage, disturbance, confusion, and cultural shock in human society that will dwarf the fuss over synthetic intelligence. In the coming years the story headlines will shift from “everyone will lose their job” (they won’t) to “AI partners are the end of civilization as we know it.”

We can rationally process the fact that a computer could legitimately be rational. We may not like it, but we could accept the fact that a computer could be smart, in part because we have come to see our own brains as a type of computer. It is hard to believe they could be as smart as we are, but once they are, it kind of makes sense.

Accepting machine-made creativity is harder. Creativity seems very human, and it is in some ways perceived as the opposite of rationality, and so it does not appear to belong to machines, as rationality does.

Emotions are interesting because they are clearly found not only in humans but in many, many animals. Any pet owner could list the ways in which their pets perceive and display emotions. Part of the love of animals is being able to resonate with them emotionally. They respond to our emotions as we respond to theirs. There are genuine, deep emotional bonds between human and animal.

Those same kinds of emotional bonds are coming to machines. We see glimmers of it already. Nearly every week a stranger sends me logs of their chats with an AI demonstrating how deep and intuitive they are, how well they understand each other, and how connected they are in spirit. And we get reports of teenagers getting deeply wrapped up with AI “friends.” This is all before any serious work has been done to deliberately embed emotions into the AIs.

Why will we program emotions into AIs? For a number of reasons:

First, emotions are a great interface for a machine. They make interacting with machines much more natural and comfortable. Emotions are easy for humans. We don’t have to be taught how to act; we all intuitively understand cues such as praise, enthusiasm, doubt, persuasion, surprise, and perplexity, which a machine may want to use. Humans use subtle emotional charges to convey non-verbal information, importance, and instruction, and AIs will use similar emotional notes in their instructions and communications.

Second, the market will favor emotional agents, because humans do. AIs and robots will continue to diversify, even as their basic abilities converge, and so their personalities and emotional character will become more important in choosing which one to use. If they are all equally smart, the one that is friendlier, or nicer, or a better companion, will get the job.

Third, a lot of what we hope artificial agents will do, whether they are software AIs or hard robots, will require more than rational calculations. It will not be enough that an AI can code all night long. We are currently overrating intelligence. To be truly creative and capable of innovations, to be wise enough to offer good advice, will require more than IQ. The bots need sophisticated emotional dynamics that are deeply embedded in their software.

Is that even possible? Yes.

There are research programs (such as those at MIT) going back decades that have been figuring out how to distill emotions into attributes that can be ported over to machines. Some of this knowledge pertains to ways of visually displaying emotions in hardware, just as we do with our own faces. Other researchers have extracted ways we convey emotion with our voice, and even in the words of a text. Recently we’ve witnessed AI makers tweaking how complimentary and “nice” their agents are because some users found them too flattering, and others simply did not like the change in personality. While we can definitely program in personality and emotions, we don’t yet know which ones work best for a particular task.

Displaying emotions is only half of the work for machines. The other half is detecting and comprehending human emotions. Relationships are two-way, and to truly be an emotional agent, an AI must get good at picking up your emotions. There has been a lot of research in that field, primarily in facial recognition: recognizing not just your identity but how you are feeling. There are commercially released apps that can watch a user at their keyboard and detect whether they are depressed or undergoing emotional stress. The extrapolation of that will be smart glasses that not only look out but also look back at your face to parse your emotions. Are you confused, or delighted? Surprised, or grateful? Determined, or relaxed? Already, Apple’s Vision Pro has inward-facing cameras in its goggles that track your eyes and microexpressions such as blinks and eyebrow raises. Current text LLMs make no attempt to detect your emotional state beyond what can be gleaned from the words in your prompt, but it is not technically a huge jump to do that.

In the coming years there will be lots of emotional experiments. Some AIs will be curt and logical; some will be talkative extroverts. Some AIs will whisper, and only talk when you are ready to listen. Some people will prefer loud, funny, witty AIs that know how to make them laugh. And many commercial AIs will be designed to be your best friend.

We might find that admirable for an adult, but scary for a child. Indeed, there are tons of issues to be wary of when it comes to AIs and kids, not just emotions. But emotional bonds will be a key consideration in children’s AIs. Very young human children already can bond with, and become very close to inert dolls and teddy bears. Imagine if a teddy bear talked back, played with infinite patience, and mirrored their emotions. As the child grows it may not ever want to surrender the teddy. Therefore the quality of emotions in machines will likely become one of those areas where we have very different regimes, one for adults and one for children. Different rules, different expectations, different laws, different business models, etc.

But even adults will become very attached to emotional agents, very much as in the movie Her. At first society will brand those humans who get swept up in AI love as delusional or mentally unstable. But just as most of the people who have deep love for a dog or cat are not broken, but well adjusted and very empathetic beings, so most of the humans who will have close relationships with AIs and bots will likewise see these bonds as wholesome and broadening.

The common fear about cozy relationships with machines is that they may be so nice, so smart, so patient, so available, so much more helpful than other humans around, that people will withdraw from human relationships altogether. That could happen. It is not hard to imagine well-intentioned people only consuming the “yummy easy friendships” that AIs offer, just as they are tempted to consume only the yummy easy calories of processed foods. The best remedy for this temptation is the same as for fast food: education and better choices. Part of growing up in this new world will be learning to discern the difference between pretty perfect relationships and messy, difficult, imperfect human ones, and the value the latter give. To be your best, whatever your definition, requires that you spend time with humans!

Rather than ban AI relationships (or fast food), we should moderate them and keep them in perspective. In fact, the “perfect” behavior of an AI friend, mentor, coach, or partner can be a great role model. If you surround yourself with AIs that have been trained and tweaked to be the best that humans can make, this is a fabulous way to improve yourself. The average human has very shallow ethics, and contradictory principles, and is easily swayed by their own base desires and circumstances. In theory, we should be able to program AIs to have better ethics and principles than the average human. In the same way, we can engineer AIs to be a better friend than the average human. Having these educated AIs around can help us to improve ourselves, and to become better humans. And the people who develop deep relationships with them have a chance to be the most well-adjusted and empathetic people of all.

The argument that the AIs’ emotions are not real because “the bots can’t feel anything” will simply be ignored, just like the criticism that artificial intelligence is not real because AIs don’t understand. It doesn’t matter. We don’t understand what “feeling” really means and we don’t even understand what “understand” means. These are terms and notions that are habitual but no longer useful. AIs do real things we used to call intelligence, and they will start doing real things we used to call emotions. Most importantly, the relationships humans will have with AIs, bots, and robots will be as real and as meaningful as any other human connection. They will be real relationships.

But the emotions that AIs and bots have, though real, are likely to be different. Real, but askew. AIs can be funny, but their sense of humor is slightly off, slightly different. They will laugh at things we don’t. And the way they are funny will gradually shift our own humor, just as the way they play chess and Go has changed how we play those games. AIs are smart, but in an unhuman way. Their emotionality will be similarly alien, since AIs are essentially artificial aliens. In fact, we will learn more about what emotions fundamentally are from observing them than we have learned from studying ourselves.

Emotions in machines will not arrive overnight. The emotions will gradually accumulate, so we have time to steer them. They begin with politeness, civility, niceness. They praise and flatter us, easily, maybe too easily. The central concern is not whether our connection with machines will be close and intimate (they will), nor whether these relationships are real (they are), nor whether they will preclude human relationships (they won’t), but rather who does your emotional agent work for? Who owns it? What is it being optimized for? Can you trust it to not manipulate you? These are the questions that will dominate the next decade.

Clearly the most sensitive data about us would be information stemming from our emotions. What are we afraid of? What exactly makes us happy? What do we find disgusting? What arouses us? After spending all day for years interacting with our always-on agent, said agent would have a full profile of us. Even if we never explicitly disclosed our deepest fears, our most cherished desires, and our most vulnerable moments, it would know all this just from the emotional valence of our communications, questions, and reactions. It would know us better than we know ourselves. This will be a common refrain in the coming decades, repeated in both exhilaration and terror: “My AI agent knows me better than I know myself.”

In many cases this will be true. In the best case scenario we use this tool to know ourselves better. In the worst case, this asymmetry in knowledge will be used to manipulate us, and expand our worst selves. I see no evidence that we will cease including AIs in our lives, hourly, if not by the minute. (There will be exceptions, like the Amish, who drop out, but they will be a tiny minority.) Most of us, for most of the time, will have an intimate relationship with an AI agent/bot/robot that is always on, ready to help us in any way it can, and that relationship will become as real and as meaningful as any other human connection. We will willingly share the most intimate hours of our lives with it. On average we will lend it our most personal data as long as the benefits of doing so keep coming. (The gate in data privacy is not really who has the data, but how much benefit I get. People will share any kind of data if the benefits are great enough.)

Twenty-five years from now, if the people whose constant companion is an always-on AI agent are total jerks, misanthropic bros, and losers, this will be the end of the story for emotional AIs. On the other hand, if people with a close relationship with an AI agent are more empathetic than average, more productive, distinctly unique, well adjusted, with a richer inner life, then this will be the beginning of the story.

We can steer the story to the beginning we want by rewarding those inventions that move us in that direction. The question is not whether AI will be emotional, but how we will use that emotionality.

Everything I Know about Self-Publishing

This essay is also available on my Substack. Subscribe here: https://kevinkelly.substack.com/

In my professional life, I’ve had several bestselling books published by New York publishers, as well as many other titles that sold modestly. I have also self-published a bunch of books, including one bestseller on Amazon and two massive hit Kickstarter-funded books. I have had lots of foreign editions released by other publishers around the world, including bestsellers in those countries. Every year I also publish a few private books to give away. I've contracted books to be printed in the US and overseas. I've sold big coffee-table masterpieces and tiny text booklets. Together with partners, I run some notable newsletters, a very popular website, and a podcast with 420 episodes. I have accumulated followers on various platforms. I'm often asked for advice about how to go about publishing today, with all its options, so here is everything I have learned about publishing and self-publishing so far.

The Traditional Route

The task: You create the material; then professionals edit, package, manufacture, distribute, promote, and sell the material. You make, they sell. At the appropriate time, you appear on a bookstore tour to great applause, to sign books and hear praise from fans. Also, the publishers will pay you even before you write your book. The advantages of this system are obvious: you spend your precious time creating, and all the rest of the chores will be done by people who are much better at those chores than you.

The downsides are also clear: Since the publisher controls the money, they control the edit, the title, the cover, the ads, the copyrights, and licenses. Your work becomes a community project, and it slows the whole process down, because yours is not the only project everyone is working on. Your work needs to fit into their lineup, their brand, their catalog, their pipeline, their schedule of all the other projects going on. The pace can seem glacial compared to the rest of the world.

For the most part, however, the peak of this traditional system is gone, finished, over. Reading habits have altered, buying habits are new, and attention has shifted to new media. It’s an entirely new publishing world. Today, some books experience some parts of this, but exceptionally few are treated to this full traditional process.

Publishers

Established mass-market publishers are failing, and they are merging to keep going. Traditional book publishers have lost their audience, which was bookstores, not readers. It’s very strange, but New York book publishers do not have a database with the names and contacts of the people who buy their books. Instead, they sell to bookstores, which are disappearing. They have no direct contact with their readers; they don’t “own” their customers.

So when an author today pitches a book to an established publisher, the second question from the publishers after “what is the book about” is “do you have an audience?” Because they don’t have an audience. They need the author and creators to bring their own audiences. So, the number of followers an author has, and how engaged they are, becomes central to whether the publisher will be interested in your project.

Many of the key decisions in publishing today come down to whether you own your audience or not.

Agents

In the traditional realm, agents helped authors and they helped publishers. Publishers did not want to waste their time evaluating probable junk, so they would spend their limited time looking at what agents presented to them. In theory, the agent would know the editors' preferences and know what they were interested in, and the editor could trust them to bring good stuff.

For the author, agents had the relationships with editors, knew who might be interested in a project, made sure the legal contracts were favorable to the author, and, most importantly, negotiated good terms. For this work, agents would take 15% off the top of any and all money coming from the publisher. For most authors, that is a significant amount of money.

Are agents worth it? In the beginning of a career, yes. They are a great way to connect with editors and publishers who might like your stuff, and for many publishers, this is the only realistic way to reach them. Are they worth it later? Probably, depending on the author. I do not enjoy negotiating, and I have found that an agent will ask for, demand, and get far more money than I would have myself, so I am fine with their cut. Are they essential? Can you make it in the traditional publishing world without an agent? Yes, but it is an uphill climb.

The problem is, how do you find a good agent? I don’t know. I inherited a great agent very early in my career from the publisher I first worked for, and I have happily been with them since. If I had to start from scratch now, I’d ask friends with agents who make stuff like my stuff to recommend theirs.

In self-publishing, you avoid agents and so keep that 15%.

Advances

What an agent will ask for from a publisher is a bunch of money upfront, when the contract is signed. This is the advance. You pitch a book, and if the editors accept it, they give you a deadline of a year or so to produce it. The role of the advance is to pay you a wage until the book is released, after which it will begin earning royalties for you. Royalties might be something like 7-10% of the retail price per book. The money you get on signing is technically an “advance against royalties.” This means that whatever they pay you in advance is deducted from your royalties, so you won’t be paid anything beyond the advance until and unless your royalty earnings exceed it.

It is very common for authors to not earn anything beyond their advance. The calculation for the amount of the advance goes roughly like this: Let’s say you earn $1 royalty for every book sold. The publishers estimate they can sell 30,000 copies in the first year, and so they offer you an advance against future royalties of $30,000, or one year’s worth of sales. Obviously, many other factors go into this equation, but to a first approximation, the most you will get for an advance is based on what kind of sales they expect immediately.
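The rule of thumb above can be written as a short Python sketch. It uses only the illustrative figures from the text ($1 royalty per copy, 30,000 copies expected in the first year); the function names are hypothetical, and real advances weigh many other factors.

```python
def estimate_advance(royalty_per_copy: float, first_year_copies: int) -> float:
    """First approximation: the advance is about one year's expected royalties."""
    return royalty_per_copy * first_year_copies


def royalty_checks(royalty_per_copy: float, copies_sold: int, advance: float) -> float:
    """Money paid beyond the advance: nothing until royalties exceed the advance."""
    earned = royalty_per_copy * copies_sold
    return max(0.0, earned - advance)


advance = estimate_advance(1.00, 30_000)
print(advance)                                  # 30000.0
print(royalty_checks(1.00, 25_000, advance))    # 0.0: never earns out
print(royalty_checks(1.00, 40_000, advance))    # 10000.0
```

The `max(0.0, ...)` captures the “advance against royalties” idea: an author who never earns out keeps the advance but sees no further checks.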

The rule of thumb for an author is that you should get the biggest advance you can (and this is how an agent can help), even if this means you won’t earn out the advance. The reason is: the bigger the advance, the bigger the commitment the publisher must make in promotion, publicity, and sales. They now have significant skin in your game. Publishers are stretched thin, and their limited sales resources tend to go where they have the most to lose. If an advance is skimpy, so will be the resources allotted to that book.

BTW, you should not have concerns about taking a larger advance than you ever earn out, because a publisher will earn out your advance long before you do. They make more money per book than you do, so their earn-out threshold comes much earlier than the author’s.
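A rough Python sketch shows why the publisher recoups first. The $4 of net income per copy for the publisher is a purely hypothetical figure chosen for illustration (the text says only that publishers make more per book than authors do); the $1 author royalty and $30,000 advance reuse the earlier example, and the helper name is also hypothetical.

```python
import math


def copies_to_recoup(advance: float, income_per_copy: float) -> int:
    """Copies that must sell before income per copy covers the advance."""
    return math.ceil(advance / income_per_copy)


ADVANCE = 30_000.0
AUTHOR_ROYALTY = 1.00   # from the earlier example
PUBLISHER_NET = 4.00    # hypothetical: publisher's income per copy after costs

print(copies_to_recoup(ADVANCE, AUTHOR_ROYALTY))   # 30000 copies for the author
print(copies_to_recoup(ADVANCE, PUBLISHER_NET))    # 7500 copies for the publisher
```

Whatever the real per-copy figures are, as long as the publisher’s take exceeds the author’s royalty, its break-even point arrives sooner.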

Thus one of the advantages of this traditional system – of going with a publisher – is that they bankroll your project. They reduce a bit of your risk. Likewise, that is the genius of Kickstarter and other crowdfunders for self-publishing: the presales bankroll your project, reducing risk. Crowdfunding becomes the bank.

Crowdfunding

I’ve written a whole essay on my 1,000 True Fans idea, which can be summarized thus: You don’t need a million fans to make self-publishing, or the self-creation of anything, work. If you control your audience (that is, if you have a direct relationship with your customers individually, have their names and emails, and can communicate with them directly), then it is possible to have as few as a thousand true fans support you. True fans are superfans who will buy anything and everything you produce. If you can produce enough to sell each of your true fans $100 worth per year, you can make a living with 1,000 true fans. I go into this approach in greater detail in my essay first published in 2008, which you can read here.
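The arithmetic behind 1,000 true fans is simple enough to check in a few lines of Python. The function names are hypothetical; the optional platform fee borrows the 3-5% cut this essay cites for crowdfunding platforms, and the sketch ignores production and fulfillment costs entirely.

```python
import math


def yearly_income(fans: int, spend_per_fan: float, platform_fee: float = 0.0) -> float:
    """Gross revenue from true fans, less an optional platform fee (as a fraction)."""
    return fans * spend_per_fan * (1.0 - platform_fee)


def fans_needed(target_income: float, spend_per_fan: float, platform_fee: float = 0.0) -> int:
    """How many true fans it takes to reach a target yearly income."""
    return math.ceil(target_income / (spend_per_fan * (1.0 - platform_fee)))


print(yearly_income(1_000, 100))            # 100000.0
print(yearly_income(1_000, 100, 0.05))      # about 95000 after a 5% platform cut
print(fans_needed(100_000, 100))            # 1000
print(fans_needed(100_000, 100, 0.05))      # 1053 once the fee is covered
```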

Today there are many tools and platforms that cater to developing and maintaining your own audience. In addition to crowdfunders such as Indiegogo, Kickstarter, Backerkit, and dozens more, there are also tools for sustaining support with patrons, such as Patreon. Crowdfunders tend to be used at the launch of a project, while something like Patreon permits constant support, primarily for a creator rather than a particular project. These can be combined, of course. You could launch your self-published work with a Kickstarter, and then gather Patreon support for sequels, backstory and making-of material, future editions, or side projects. Periodic publications have subscriptions for ongoing support.

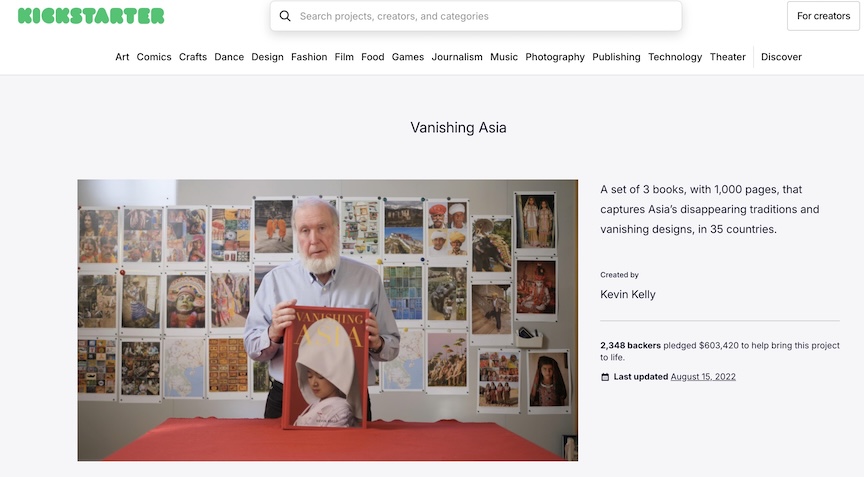

These days backers expect a video (and other marketing bits) selling the book. Crowdfunding campaigns built on pre-sales have become very sophisticated and require a lot of preparation. The Kickstarter for my Asia photobook was relatively simple and crude.

The chief advantages of crowdfunding are three, and they are significant: 1) You get the funds before you create, so they can support you while you create. 2) Unlike with an outside publisher, you keep all of the revenue (minus 3-5% for the platform). And 3) you own the audience for future work.
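
A short Python sketch of what "keep all of the revenue minus the platform's cut" means in dollars. The $50,000 campaign and the $20,000 fulfillment figure are hypothetical, and the fee rate is a parameter rather than a constant because actual fees (including any separate payment-processing charges) differ from platform to platform. The optional fulfillment cost is included because delivering rewards also eats into the pledges.

```python
def crowdfunding_net(pledged: float, platform_fee_rate: float,
                     fulfillment_cost: float = 0.0) -> float:
    """Money left from pledges after the platform fee and (optionally) fulfillment."""
    if not 0.0 <= platform_fee_rate < 1.0:
        raise ValueError("fee rate must be a fraction between 0 and 1")
    platform_fee = pledged * platform_fee_rate
    return pledged - platform_fee - fulfillment_cost


# Hypothetical $50,000 campaign at the low and high ends of a 3-5% fee.
for rate in (0.03, 0.05):
    print(f"{rate:.0%} fee -> ${crowdfunding_net(50_000, rate):,.2f}")

# The same campaign if printing and shipping rewards costs a hypothetical $20,000.
print(f"${crowdfunding_net(50_000, 0.05, fulfillment_cost=20_000):,.2f}")
```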

The disadvantages are also three. 1) It is a huge amount of work. Most crowdfunding campaigns run for 30 days, and tending one for 30 days is a full-time job. 2) Success requires a different set of talents (marketing, sales, social engagement) from those a creator may have. 3) You are responsible for making sure your fans actually get what they were promised. This "fulfillment" aspect of crowdfunding is often overlooked until the end, when it turns out to be the most difficult part of the process for many creators.

Production

Once upon a time, it was a huge deal to design and physically print a book (or press a music album, or deliver a reel of film). Today amateurs with little experience can handle those processes. And digital versions often make creating, duplicating, and distributing easier still.

There are three paths to production: traditional batch manufacturing, on-demand printing, and digital publishing.

Batch Printing Presses

The traditional way of printing hardcover books still exists, and it is a big business. A really first-rate printer will have different kinds of presses for different jobs, including the same fast digital presses that on-demand printers use. In fact, for some jobs they will use these same digitally controlled ink-jet printers, just at a larger scale and speed. The chief advantages of classic printing on paper are three: scale, quality, and color.

Pages from my first photobook, Asia Grace, published by Taschen, stacked up in their printing plant in Verona, Italy. In the old days before presses were completely computerized, the art director for the book (me) would be present during printing to oversee the many color tradeoffs each signature of pages needed.

Scale: Books printed in larger volume batches, or “runs,” can win huge discounts on the price per copy. A regular-sized hardcover book printed in Asia might only cost a few dollars to print, and a few more dollars for packing and shipping to your home. That’s a great deal if you list a book at $30. The higher the volume, the lower the price per unit. Printing outside of Asia is more expensive, but still worth considering – if you think you want a lot of copies, say more than 5,000 to start.
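
One common way to see why bigger runs are cheaper per copy is a two-part cost model: a one-time setup charge (things like plates and press make-ready) spread across the whole run, plus a per-copy cost for paper, ink, and binding. The model is a simplification and the dollar figures below are illustrative assumptions, not quotes from any printer.

```python
def unit_cost(run_size: int, setup_cost: float, per_copy_cost: float) -> float:
    """Average cost per copy: fixed setup spread over the run, plus per-copy cost."""
    if run_size <= 0:
        raise ValueError("run size must be positive")
    return setup_cost / run_size + per_copy_cost


# Hypothetical quote: $6,000 of one-time setup plus $2.50 per copy.
for run in (1_000, 5_000, 20_000):
    print(f"{run:>6,} copies: ${unit_cost(run, 6_000, 2.50):.2f} each")
```

As the run grows, the setup share shrinks toward zero and the unit cost approaches the per-copy figure, which is why discounts flatten out at very high volumes.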

Quality: There is a resurgence in treating a well-crafted book as an art object. By leaning into its physicality – adding an embossed cover, heavy rag paper, deckled edges, glorious binding – the book can transcend its intangible counterpart on the Kindle. As a publisher, you can give a book a unique custom size, or magnificent die-cut covers for added zest and higher prices. Some self-published authors offer handsome bound book sets, books hand-signed via tipped-in sheets, or super high-end limited editions cradled in their own box. All these kinds of qualities require a collaborating printer somewhere.

Color: On-demand printing can do color, but not oversized, and not cheaply. In my experience, serious coffee-table visual books still need the hand-holding and economics of a printing plant. And I regret to say that after many years of searching, I have not found a printer in the US capable of doing large full-color books at a reasonable price. You are most likely going to have to go to Asia, to countries such as Vietnam, Indonesia, Singapore, India, or Turkey. China still has the best prices with the highest quality of color printing.

Having your book printed at a printing plant has its disadvantages. #1: You have to house and store the books somewhere. Either you have an available basement or garage, or you rent a place, or you hire a dropshipper, or you pay a distribution giant like Ingram or Amazon to handle it for you. The full run of a book can take up more room than you might think once it is packed for shipping. My Vanishing Asia book set, financed on Kickstarter and printed in Turkey, filled 4 shipping containers, each 40 feet long! That is a LOT of books to store.

Disadvantage #2 is that you need to pay the printer first, long before you sell the books. Not only is this a cash-flow challenge, but you also have to guess how many books you will sell before they are sold. (Running a pre-sale on a crowdfunding platform like Kickstarter goes a long way toward relieving that problem.) To get the best price you need to print a lot, but if you print a lot, you have a lot to pay for, and a lot to store if the books don't sell.

On-demand

You can use free software to design your book and then send it to an on-demand printer to make 1 copy or 1,000 copies, printed one by one as each copy is sold. A copy does not exist until it is sold, so there are no books to bank, store, or ship. An on-demand regular softcover book would cost about $5 to make. It will be professional quality, indistinguishable from a trade book you might buy on Amazon, in part because many of the books from big-time publishers that you buy on Amazon are actually printed on demand using this same technology. (Big-time publishers are also printing on demand!) However, while the ink printing is first class, the binding, paper quality, and cover details won't be up to what you can get from the best modern presses. What you'll get is the good-enough printing found in the average hardcover book.

The advantage to a creator (and to NY publishers) is that there is no inventory of unsold books to store or handle. You print the book when, and only when, it is sold. The disadvantage is that the printing cost per book is higher.
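
The trade-off between a batch run and on-demand printing can be sketched as a break-even calculation in Python. The roughly $5 on-demand cost comes from the text; the 2,000-copy run, $3,000 setup, and $1.50 per copy are hypothetical. The comparison covers printing costs only and ignores storage, shipping, and the cash tied up before any copy sells.

```python
import math


def batch_total(run_size: int, setup_cost: float, per_copy_cost: float) -> float:
    """Cost of a batch print run, all paid up front before any copy sells."""
    return setup_cost + run_size * per_copy_cost


def on_demand_total(copies_sold: int, pod_cost: float) -> float:
    """On-demand printing cost: only the copies actually sold get printed."""
    return copies_sold * pod_cost


def break_even_sales(run_size: int, setup_cost: float,
                     per_copy_cost: float, pod_cost: float) -> int:
    """Fewest sales at which on-demand printing stops being cheaper than the batch run."""
    return math.ceil(batch_total(run_size, setup_cost, per_copy_cost) / pod_cost)


def cheaper_option(copies_sold: int, run_size: int, setup_cost: float,
                   per_copy_cost: float, pod_cost: float) -> str:
    """Compare printing costs only; storage, shipping, and cash flow are ignored."""
    if not 0 <= copies_sold <= run_size:
        raise ValueError("copies sold must be between 0 and the run size")
    batch = batch_total(run_size, setup_cost, per_copy_cost)
    pod = on_demand_total(copies_sold, pod_cost)
    return "on-demand" if pod < batch else "batch"


# Hypothetical numbers: a 2,000-copy run with $3,000 setup and $1.50 per copy,
# versus about $5 per on-demand copy.
RUN, SETUP, PER_COPY, POD = 2_000, 3_000.0, 1.50, 5.00

print(break_even_sales(RUN, SETUP, PER_COPY, POD))
for sold in (500, 1_000, 1_500, 2_000):
    print(sold, cheaper_option(sold, RUN, SETUP, PER_COPY, POD))
```

Under these assumptions the batch run costs $6,000 whether you sell 500 copies or 2,000, so it only pays off once sales pass the break-even point; below it, on-demand printing is cheaper despite the higher cost per copy.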

There are four different services I can use to print books on demand. My preferred printer for color and photo/art books is Blurb, for its quality and ease of use; they keep up with state-of-the-art color printing. You can design your book, export it as a PDF, and have Blurb print it on demand. Or you can use Blurb’s own web-based design program, or a version of its software built into Adobe’s Lightroom, which is pretty standard for photographers. It’s very simple to go from photographs to a fully designed book, and then to a printed one.

Sample pages from the various coffee-table books I have had printed on demand by Blurb. Some of these books have editions of only 2 copies, yet the quality of the color printing is first class.

A second option for on-demand printing of standard books with black-and-white text, as well as books with color illustrations, is Lulu. Their photo/art books are a bit cheaper than Blurb’s, and they are very competitive on standard text-based books. Most importantly, Lulu integrates with your own customer list, so you own your audience.

That is not true of the third option, which I also use a lot: Amazon. Amazon offers its print-on-demand service, called KDP, to anyone who wants it, with the added huge advantage that your book will not only be listed on Amazon immediately, but also be delivered by Amazon’s magical Prime logistics operation. So potential fans can discover your work on Amazon and then have it delivered to them the next day for free. This is huge! But the huge, and sometimes deal-killing, disadvantage is that you do not know who your readers are, as you do with Lulu. Although Amazon makes it ridiculously easy to create and sell a book, with them you don’t own your audience. But in some cases, that is still worth it.

The fourth option is IngramSpark. I have little direct experience with this vendor, but others who do claim it is the best choice for text-based books aimed at libraries and bookstores. Indie bookstores are doing much better than chain bookstores, and they usually avoid Amazon's distribution system; Ingram is their main vendor for getting books, as it is for libraries. In addition to getting your book into Ingram's distribution, IngramSpark offers the self-publisher more options for book sizes, paper, and binding.

Because you can print as few as one copy of a book, I use these on-demand print services to make prototype versions of a book to check its size and feel. This small on-demand prototype of my book of advice was later published in a larger page size by Penguin/RandomHouse.

Digital

By far the easiest way to publish a book is to sell a digital copy of it. More authors should consider publishing only digital books. You still have to promote them, but you don’t have to print, ship, handle, or store them. A commercial publisher might offer the author a royalty of 7% of the retail price, which is about $2 on a $30 book, so you may make just as much money per copy selling a digital edition for $2. Creating digital books is a great way to start a publishing career. I have two friends who started out publishing their science fiction as inexpensive digital short stories, which sold well, were later discovered by print publishers, made into printed books, and eventually turned into movies by Hollywood. And they still sell the digital versions!

You can sell an e-book, or even a chapter of a book, on Amazon's KDP, and making a book for the Kindle is easy. I've had some digital books up on Amazon KDP for the past ten years, and they continue to sell slowly, even though I have not had to do anything with them since uploading them. While the Kindle gives you a royalty of 70% and reaches a large Amazon/Kindle audience, its downsides are that you don't own your audience, it demands exclusivity, and you must use its proprietary file format, which removes any distinctive interior design and prevents the book from being read on other devices. There are dozens of other e-book readers and platforms, like Kobo, Apple Books, and Google Play Books, that have their own proprietary constraints. IngramSpark has an interesting hybrid program for e-book + on-demand printing.
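
The print-versus-digital royalty comparison can be checked with a few lines of Python. The 7% print royalty on a $30 book and the 70% Kindle rate come from the text; treating the digital share as a flat percentage of list price is a simplifying assumption, since platforms may attach price ranges, delivery fees, or other conditions to their rates.

```python
def per_copy_income(price: float, share: float) -> float:
    """What the author receives per copy at a given list price and share."""
    if not 0.0 < share <= 1.0:
        raise ValueError("share must be a fraction in (0, 1]")
    return price * share


def matching_digital_price(print_price: float, print_royalty_rate: float,
                           digital_share: float) -> float:
    """Digital list price at which the author's take equals the print royalty."""
    target = per_copy_income(print_price, print_royalty_rate)
    return target / digital_share


print_royalty = per_copy_income(30.00, 0.07)             # 7% of a $30 book
price_at_70 = matching_digital_price(30.00, 0.07, 0.70)  # flat 70% share assumed

print(f"Print royalty per copy: ${print_royalty:.2f}")
print(f"Digital price that matches it at 70%: ${price_at_70:.2f}")
```

At a flat 70% share, matching a $2.10 print royalty takes a digital price of about $3; a $2 e-book comes close only when you keep nearly all of the sale.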

These days a lot more people are comfortable reading a book in PDF form. I sell secure PDFs of some of my books on Gumroad, an easy-to-use web app that collects the payment and sends the buyer an authorized copy. Gumroad works fine, does not charge much, and is super easy to set up; it is perfect for my low volume of digital sales.

The digital publishing world is fast-moving, and I don't have enough recent experience with e-books to feel confident making finer-grained recommendations.

Audiobooks

Another important digital format I neglected to mention in the first version of this piece is audiobooks. For the past decade audiobooks have been the fastest-growing format for books, the one sunny spot in a worried landscape. There are many readers who only listen to books and never read them. I don't have any experience self-publishing audiobooks; all my mainstream publishers developed the audio versions with almost no input from me. But my friend, the science fiction author Eliot Peper, has self-published 9 audiobooks, sometimes hiring voice actors and, more recently, narrating them himself.

Currently the platform of choice for self-publishing audiobooks is ACX. ACX is a do-it-yourself platform run by Audible, the major audiobook platform, which is owned by Amazon. ACX is a full-service platform with sound-quality tests, a million narrators you could hire, and other tools to make the process easy. They take a hefty royalty of 40% and demand exclusivity, but your book is listed on Audible; for many readers that is the only place they will ever look for audiobooks. Alternatives such as Spotify, which is expanding into audiobooks, might make better deals with authors.

Distribution

Digital is easy, but increasingly, the "difficulties" of analog books have become an attraction. Some readers gravitate to the tactile pleasures of a well-made artifact and revel in the physical chore of turning pages. Sometimes the content of a book demands a bigger interface than a small screen can provide, so it needs the expanse of a large printed page. Some appreciate the longevity of paper books, which never go obsolete and can be read for centuries without a power source or updates. Others are attracted to the serendipity of browsing a bookstore. And some folks value the scarcity of a limited printed edition.

But once words are printed on a page, you have to ship them somehow. On-demand printers like Amazon KDP, Lulu, or Blurb will ship the books directly to individual readers as they are ordered, one by one. There is no inventory for you, and thus no work for the author. How well the on-demand publishers handle shipping to a long mailing list varies, and it takes a bit of work. Amazon has the best prices for shipping (zero) but the worst tools for mailing to a list. They want readers to order from their own Amazon accounts; Amazon wants to control the audience. Blurb can only ship to customers who order on Blurb. Lulu lets you control your own fan list but charges a lot more to ship.

Cartons of my heavy oversized graphic novel, Silver Cord, pile up in my studio after being shipped in from China. I was unprepared for the chore of shipping the oversized books out to all the backers without damage.

Let’s say you want to go all in: you print the books yourself, and now you have to get them to your fans. There are three options. From the printing plant, the books will be shipped by truck to one of the following:

- Your garage. You purchase mailing envelopes or boxes and tape them up and mail them out. Plus: you can sign the books. Minus: tons of ongoing work, and not cheap to mail, even with Media Mail in the US. Shipping globally is a huge headache, and insanely expensive.

- A drop shipper, or what is today called a 3PL (third-party logistics provider). For a fee, this kind of company will pick your book from your inventory in its warehouse, package it, and ship it out to your fan or to a bookstore. You give them either a mailing list or access to your orders on Shopify or Kickstarter. Plus: no grunt work, no inventory at home. Minus: not cheap either, and they also charge a storage fee for holding your books, which could sit there for years. Commercial dropshippers also favor large-volume enterprises. I am currently trying out the dropshipper eFulfillment, which has low minimums and works with small-time operators like me.