The Singularity Is Always Near

There’s a visceral sense that we are experiencing a singularity-like event with computers and the World Wide Web. But the current concept of a singularity may not be the best explanation for the transformation in progress.

The singularity is a term borrowed from physics to describe a cataclysmic threshold in a black hole. In the canonical use, as an object is pulled into the center of gravity of a black hole, it passes a point beyond which nothing about it, including information, can escape. In other words, although an object’s entry into a black hole is steady and knowable, once it passes this discrete point nothing whatever about its future can be known. This disruption on the way to infinity is called a singular event, or a singularity.

Mathematician and science fiction author Vernor Vinge applied this metaphor to the acceleration of technological change. The power of computers has been increasing at an exponential rate with no end in sight, which led Vinge to an alarming picture. In Vinge’s analysis, at some point not too far away, innovations in computer power would enable us to design computers more intelligent than we are, and these smarter computers could design computers yet smarter than themselves, and so on, the loop of computers-making-newer-computers accelerating very quickly towards unimaginable levels of intelligence. This progress in IQ and power, when graphed, generates a rising curve which appears to approach the straight up limit of infinity. In mathematical terms it resembles the singularity of a black hole, because, as Vinge announced, it will be impossible to know anything beyond this threshold. If we make an AI which in turn makes a greater AI, ad infinitum, then their future is unknowable to us, just as our lives have been unfathomable to a slug. So the singularity became a black hole, an impenetrable veil hiding our future from us.

Ray Kurzweil, a legendary inventor and computer scientist, seized on this metaphor and applied it across a broad range of technological frontiers. He demonstrated that this kind of exponential acceleration is not unique to computer chips but is happening in most categories of innovation driven by information, in fields as diverse as genomics, telecommunications, and commerce. The technium itself is accelerating in its rate of change. Kurzweil found that if you make a very crude comparison between the processing power of neurons in human brains and the processing powers of transistors in computers, you could map out the point at which computer intelligence will exceed human intelligence, and thus predict when the cross-over singularity would happen. Kurzweil calculates the singularity will happen about 2040. That seems like tomorrow, which prompted Kurzweil to announce with great trumpets that the “Singularity is near.” In the meantime everything is racing to that point – beyond which it is impossible for us to imagine what happens.

Even though we cannot know what will be on the other side of the singularity, that is, what kind of world our super intelligent brains will provide us, Kurzweil and others believe that our human minds, at least, will become immortal because we’ll be able to either download them, migrate them, or eternally repair them with our collective super intelligence. Our minds (that is, ourselves) will continue on with or without our upgraded bodies. The singularity, then, becomes a portal or bridge to the future. All you have to do is live long enough to make it through the singularity in 2040. If you make it till then, you’ll become immortal.

I’m not the first person to point out the many similarities between the Singularity and the Rapture. The parallels are so close that some critics call the singularity the Spike to hint at that decisive moment of fundamentalist Christian apocalypse. At the Rapture, when Jesus returns, all believers will suddenly be lifted out of their ordinary lives and ushered directly into heavenly immortality without going through death. This singular event will produce repaired bodies and intact minds full of eternal wisdom, and is scheduled to happen “in the near future.” The hope is almost identical to the techno Rapture of the singularity.

There are so many assumptions built into the Kurzweilian version of the singularity that it is worth trying to unravel them. While a lot about the singularity of technology is misleading, some aspects of the notion do capture the dynamic of technological change.

First, immortality is in no way ensured by a singularity of AI. For any number of reasons our “selves” may not be very portable, or new engineered eternal bodies may not be very appealing, or super intelligence alone may not be enough to solve the problem of overcoming bodily death quickly.

Second, intelligence may or may not be infinitely expandable from our present point. Because we can imagine a manufactured intelligence greater than ours, we think that we possess enough intelligence right now to pull off this trick of bootstrapping. In order to reach a singularity of ever-increasing AI we have to be smart enough not only to create a greater intelligence, but also to make one that is able to create the next level one. A chimp is hundreds of times smarter than an ant, but the greater intelligence of a chimp is not smart enough to make a mind smarter than itself. Not all intelligences are capable of bootstrapping intelligence. We might call a mind capable of imagining another type of intelligence but incapable of replicating itself a Type 1 mind. A Type 2 mind would be an intelligence capable of replicating itself (making artificial minds) but incapable of making one substantially smarter. A Type 3 mind would be capable of creating an intelligence sufficiently smart that it could make another generation even smarter. We assume our human minds are Type 3, but it remains an assumption. It is possible that we own Type 1 minds, or that greater intelligence may have to be evolved slowly rather than bootstrapped instantly in a singularity.

Third, the notion of a mathematical singularity is illusory. Any chart of exponential growth will show why. Like many of Kurzweil’s examples, an exponential can be plotted linearly so that the chart shows the growth taking off like a rocket. Or it can be plotted on a log-log graph, which has the exponential growth built into the graph’s axis, so the takeoff is a perfectly straight line. His website has scores of them, all showing straight line exponential growth headed towards a singularity. But ANY log-log graph of a function will show a singularity at Time 0, that is, now. If something is growing exponentially, the point at which it will appear to rise to infinity will always be “just about now.”

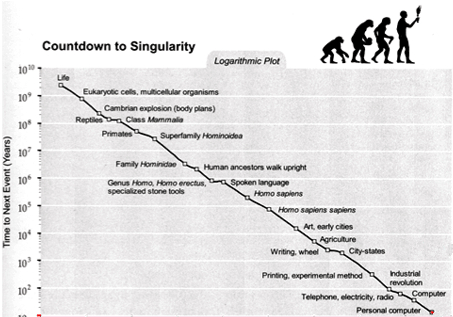

Look at this chart of the exponential rate at which major events occur in the world, called Countdown to Singularity. It displays a beautiful laser straight rush across millions of years of history.

But if you continue the curve to now instead of stopping 30 years ago, it shows something strange. Kevin Drum, a fan and critic of Kurzweil who writes for the Washington Monthly, extended this chart to the present by adding the pink section in the graph above.

Surprisingly it suggests the singularity is now. Even weirder it suggests that the view would have looked the same almost any time along the curve. If Benjamin Franklin (an early Kurzweil type) had mapped out the same graph in 1800, his graph too would have suggested that the singularity would be happening then, RIGHT NOW! The same would have happened at the invention of radio, or the appearance of cities, or at any point in history since – as the straight line indicates – the “curve” or rate is the same anywhere along the line.

Switching chart modes doesn’t help. If you define the singularity as the near-vertical asymptote you get when you plot an exponential progression on a linear chart, then you’ll get that infinite slope at any arbitrary end point along the exponential progression. That means that the singularity is “near” at any end point along the time line — as long as you are in exponential growth. The singularity is simply a phantom that will materialize anytime you observe exponential acceleration retrospectively. Since these charts correctly demonstrate that exponential growth extends back to the beginning of the cosmos, that means that for millions of years the singularity was just about to happen! In other words, the singularity is always near, has always been “near”, and will always be “near.”

For instance, if we broadened the definition of intelligence to include evolution (a type of learning), then we could say that intelligence has been bootstrapping itself all along, with smarter stuff making itself smarter, ad infinitum, and that there are no discontinuities or discrete points to map. Therefore in the end, the singularity has always been near, and will always be near.
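The self-similarity behind this third point can be checked with a few lines of arithmetic. What follows is only a rough sketch, not anything taken from Kurzweil's or Drum's charts: the growth rate, the 50-year window, and the function name `normalized_window` are all made up for illustration. It samples an exponential over a window ending at some year, scales the window so its last value is 1 (which is what a linear chart fitted to the page effectively does), and compares the view from 1800 with the view from 2006.

```python
import math

def normalized_window(end_time, width=50.0, rate=0.1, samples=6):
    """Sample exp(rate * t) over [end_time - width, end_time],
    scaled so the final (most recent) value equals 1.

    rate, width, and samples are hypothetical illustration values.
    """
    peak = math.exp(rate * end_time)
    step = width / (samples - 1)
    return [
        math.exp(rate * (end_time - width + i * step)) / peak
        for i in range(samples)
    ]

# The "chart" as seen by an observer in 1800 and by one in 2006.
view_1800 = normalized_window(1800.0)
view_2006 = normalized_window(2006.0)

# Up to floating point noise the two windows are the same curve.
same_shape = all(
    math.isclose(a, b, rel_tol=1e-9) for a, b in zip(view_1800, view_2006)
)
print(same_shape)
```

Under these assumptions the two windows match point for point, so an observer at either date sees the same flat past and the same steep takeoff at "now." Nothing in the shape of the curve singles out one endpoint over another.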

Fourth, and most important, I think that technological transitions represented by the singularity are completely imperceptible from WITHIN the transition that is represented (inaccurately) by a singularity. A phase shift from one level to the next level is only visible from the perch of the new level — after arrival there. Compared to a neuron the mind is a singularity — it is invisible and unimaginable to the lower parts. But from the viewpoint of a neuron the movement from a few neurons to many neurons to alert mind will appear to be a slow continuous smooth journey of gathering neurons. There is no sense of disruption, of Rapture. The discontinuity can only be seen in retrospect.

Language is a singularity of sorts, as was writing. But the path to both of these was continuous and imperceptible to the acquirers. I am reminded of a great story a friend tells of some cavemen sitting around the campfire 100,000 years ago, chewing on the last bits of meat, chatting in guttural sounds. One of them says,

“Hey, you guys, we are TALKING!”

“What do you mean TALKING? Are you finished with that bone?”

“I mean we are SPEAKING to each other! Using WORDS. Don’t you get it?”

“You’ve been drinking that grape stuff again, haven’t you.”

“See we are doing it right now!”

“What?”

As the next level of organization kicks in, the current level is incapable of perceiving the new level, because that perception must take place at the new level. From within our emerging global culture, the coming phase shift to another level is real, but it will be imperceptible to us during the transition. Sure, things will speed up, but that will only hide the real change, which is a change in the rules of the game. Therefore we can expect in the next hundred years that life will appear to be ordinary and not discontinuous, certainly not cataclysmic, all the while something new gathers, until slowly we recognize that we have acquired the tools to perceive that new tools are present, and have been for a while.

When I mentioned this to Esther Dyson, she reminded me that we have an experience close to the singularity every day. “It’s called waking up. Looking backwards, you can understand what happens, but in your dreams you are unaware that you could become awake….”

A thousand years from now, all the 11-dimensional charts of that time will show that “the singularity is near.” Immortal beings and global consciousness and everything else we hope for in the future may be real and present but still, a linear-log curve in 3006 will show that a singularity approaches. The singularity is not a discrete event. It’s a continuum woven into the very warp of extropic systems. It is a traveling mirage that moves along with us, as life and the technium accelerate their evolution.

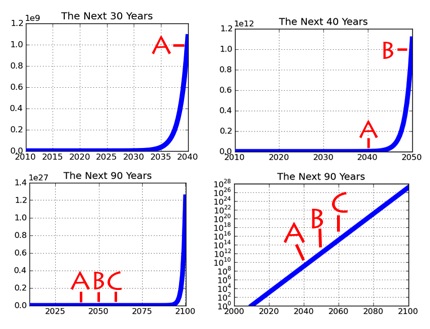

UPDATE: Philip Winston crafted a marvelous way of visualizing the inherent phantom nature of a technological singularity. In a post he calls The Singularity is Always Steep, he maps out the problem. I’ve combined his images into one picture here. In the first square (upper left) the curve of progress shows the vertical Singularity in 30 years. But if you keep the curve going another 10 years, that earlier point, once vertical, becomes horizontal, and a new vertical point appears. Likewise you can extend the curve ahead another ten years and then another, and all those former vertical Singularities sink into ordinariness. The only remedy is to plot the point on a log curve (lower right box), which suddenly reveals the truth: any point along an exponential curve, whether in the past, present, or future, is a singularity. The Singularity is always near, always right now, and always in the past. In other words, it is meaningless.
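The effect in those four squares can be approximated numerically. This is a loose sketch rather than a reconstruction of Winston's actual figures: the growth rate `RATE` and the helper names are hypothetical. It tracks how tall the year-30 point looks on a linear chart as the chart is extended to years 40 and 50, and then checks the slope of the same curve after taking logarithms.

```python
import math

RATE = 0.3  # hypothetical growth rate per year, chosen only for illustration

def progress(year):
    """Exponential 'progress' curve."""
    return math.exp(RATE * year)

def relative_height(year, chart_end):
    """Height of `year` as a fraction of the tallest point
    on a linear chart whose last year is `chart_end`."""
    return progress(year) / progress(chart_end)

# How tall year 30 looks as the chart is extended to 40 and then 50.
heights = {end: relative_height(30, end) for end in (30, 40, 50)}

# Slope of log(progress) over successive decades.
log_slopes = [
    (math.log(progress(y + 10)) - math.log(progress(y))) / 10
    for y in (0, 10, 20, 30, 40)
]

for end, h in heights.items():
    print(end, round(h, 4))
print([round(s, 6) for s in log_slopes])
```

With this assumed rate, year 30 fills the whole chart when it is the endpoint, drops to about 5 percent of the chart height once the curve runs to year 40, and to well under 1 percent by year 50. The log slopes, by contrast, come out the same for every decade, which is the straight line in the lower right box: no decade is steeper than any other.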