The Landscape of Possible Intelligences


In A Taxonomy of Minds I explore the varieties a greater-than-human intelligence might take. We could meet greater-than-human intelligences in an alien ET, or we could make synthetic ones. The one foundational assumption behind our making new minds is that our own mind is intelligent enough to make a new and different mind. Just because we are conscious does not mean we have the smarts to make consciousness ourselves. Whether (or when) AI is possible will ultimately depend on whether we are smart enough to make something smarter than ourselves. We assume that ants have not achieved this level. We also assume that, as smart as chimpanzees are, they are not smart enough to make a mind smarter than a chimp, and so have not reached this threshold either. While some people assume humans can create a mind smarter than a human mind, humans may also be below that threshold. We simply don’t know where the threshold of bootstrapping intelligence is, nor where we stand relative to it.

We can distinguish several categories of elementary minds in relation to bootstrapping:

1) A mind capable of imagining or identifying a greater mind.

2) A mind capable of imagining but incapable of designing a greater mind.

3) A mind capable of designing a greater mind.

We fit the first criterion, but it is unclear whether we are the second or third type of mind. There is also a fourth type, which follows the third:

4) A mind capable of generating a greater mind which in turn itself creates a greater mind, and so on.

This is a cascading, bootstrapping mind. Once a mind reaches this level, the recursive mind-enlargement might keep going ad infinitum, or it might reach some limit. Alternatively, there may be more than one threshold in intelligence. Think of them as quantum levels. A mind may be able to make a mind smarter than itself, but the offspring mind may not be smart enough to make the next leap, and so gets stuck.

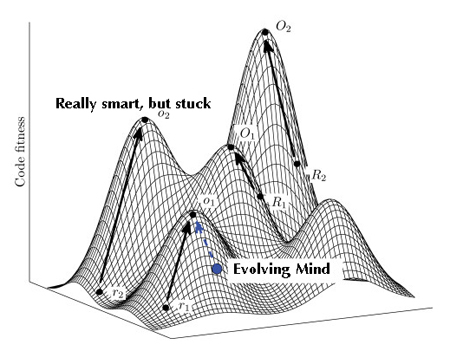

If we imagine the levels of intelligence as a ladder with unevenly spaced rungs, there may be gaps that some intelligences, or their offspring, are not able to jump. So a type 3 mind may be able to jump up four levels of bootstrapping intelligence, but not five. Since I don’t believe intelligence is linear (that is, I believe intelligence grows in many dimensions), a better illustration may be to view the problem of bootstrapping superintelligence as navigating across a rugged evolutionary landscape.

In this type of graph, higher means better adapted, more suitable in form. Different hills indicate different varieties of environments and different types of forms. This particular chart represents the landscape of possible types of intelligences. Here, the higher a mind climbs on a hill, the better suited, or more perfected, it is for that type of intelligence.

In a very rugged fitness landscape, the danger is getting stuck on a local optimum of form. An organism perfects a type of mind that is optimal for a local condition, but this very perfection imprisons it locally and prevents it from reaching a greater optimal form elsewhere. In other words, evolving to a higher elevation is not a matter of sheer power of intelligence, but of type. There may be certain kinds of minds that are powerful and optimal for some kinds of thinking, but that are incapable of overcoming the hurdles to reach a different, higher peak. Certain types of minds may be able to keep getting more powerful in the direction they have been evolving, but be incapable of shifting direction in order to reach a new power. That is, they may be incapable of bootstrapping the next generation. Other kinds of minds may be less optimal but more nimble.
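The local-optimum trap can be made concrete with a toy model. This is a minimal sketch under loudly stated assumptions, not a model of real minds: it flattens the landscape to one dimension (even though intelligence likely grows in many), the heights are invented, and `hill_climb` is a hypothetical greedy climber that always steps to a higher neighbor and stops when none exists.

```python
# Toy illustration only: a hypothetical one-dimensional "landscape of minds".
# Each index is a kind of mind; each value is how well suited that kind is.
# The heights are invented for illustration, not measured from anything.
LANDSCAPE = [1, 3, 5, 4, 2, 6, 9, 12, 8, 3]


def hill_climb(landscape, start):
    """Greedily step to the higher neighbor until no neighbor is higher."""
    position = start
    while True:
        # Only adjacent kinds of mind are reachable in a single step.
        neighbors = [p for p in (position - 1, position + 1)
                     if 0 <= p < len(landscape)]
        best = max(neighbors, key=lambda p: landscape[p])
        if landscape[best] <= landscape[position]:
            # Every available step leads downhill or sideways: a local optimum.
            return position
        position = best


# The highest point anywhere on the landscape, for comparison.
global_peak = max(range(len(LANDSCAPE)), key=lambda p: LANDSCAPE[p])

for start in (0, 4):
    peak = hill_climb(LANDSCAPE, start)
    print(f"start={start} -> stuck at {peak} (height {LANDSCAPE[peak]}), "
          f"global peak at {global_peak} (height {LANDSCAPE[global_peak]})")
```

Starting at index 0, the climber perfects itself on the small peak at index 2 (height 5) and stops, because reaching the higher peak would first require going downhill. Starting at index 4, the same rule reaches the highest peak at index 7. The outcome depends on where the climb begins, not only on how good the climbing rule is.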

At the moment we are totally ignorant of the shape of the landscape of possible intelligences. We have not yet even mapped out animal intelligences, and we have no real examples of other self-conscious intelligences to map. Navigating through the evolutionary landscape may be very smooth, or it may be very rough and highly dependent on the path an evolving mind takes.

Because we have experience with such a small set of mind types, we really have no idea whether there are limits to the varieties and levels of intelligence. While we can calculate the limits of computation (and folks like Seth Lloyd have done just that), I don’t think intelligence as we currently understand it is equivalent to computation. The internet as a whole is computationally larger than our brains, but it is not intelligent in the way we crave. Some people, like Stephen Wolfram, believe there is only one type of computation, and that there is, in effect, one universal intelligence. I tend to think there will be millions and billions of types of minds.

Recently, in conversations with George Dyson, I realized there is a fifth type of elementary mind:

5) A mind incapable of designing a greater mind, but capable of creating a platform upon which a greater mind emerges.

This type of mind cannot figure out how to birth an intelligence equal to itself, but it does figure out how to set up conditions of evolution so that a new mind emerges from the forces pushing it. Dyson and I believe this is what is happening with the web and Google. An intelligence is forming without an overt top-down designer. Right now that intelligence is rather dimwitted, but it continues to grow. Whether it develops into something near-human or greater-than-human remains to be seen. But if this embryonic smartness continues, it would represent a new way of making a mind. And of course, this indirect way of making something smarter than yourself could be used at any point in the evolutionary bootstrapping cycle of a mind. Perhaps the fourth or fifth generation of a mind will be incapable of designing the next generation but capable of designing a system in which it emerges.

We tend to think of intelligence as singular, but biologically this is unlikely. More likely intelligence is multiple, diverse, and fecund. In the long haul, the central question will concern the differences in evolvability among these various intelligences. Which types are capable of bootstrapping? And are we one of those?