The Google Way of Science


There’s a dawning sense that extremely large databases of information, starting at the petabyte scale, could change how we learn things. The traditional way of doing science entails constructing a hypothesis to match observed data or to solicit new data. Here’s a bunch of observations; what theory explains the data well enough that we can predict the next observation?

It may turn out that tremendously large volumes of data are sufficient to skip the theory part and still make a predicted observation. Google was one of the first to notice this. For instance, take Google’s spell checker. When you misspell a word while googling, Google suggests the proper spelling. How does it know this? How does it predict the correctly spelled word? It is not because it has a theory of good spelling, or has mastered spelling rules. In fact Google knows nothing about spelling rules at all.

Instead Google operates a very large dataset of observations which show that for any given spelling of a word, x number of people say “yes” when asked if they meant to spell word “y.” Google’s spelling engine consists entirely of these datapoints, rather than any notion of what correct English spelling is. That is why the same system can correct spelling in any language.
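To make the idea concrete, here is a minimal sketch of a suggester built only from recorded "did you mean" responses. It is a toy illustration under stated assumptions, not a description of Google's actual system: the class name `SpellingSuggester`, its methods, and the `min_votes` threshold are all hypothetical.

```python
from collections import Counter, defaultdict


class SpellingSuggester:
    """Toy suggester that knows no spelling rules, only observed responses.

    Hypothetical illustration; names and threshold are assumptions.
    """

    def __init__(self):
        # typed spelling -> Counter of suggestions that users accepted
        self._accepted = defaultdict(Counter)

    def record(self, typed, suggestion, accepted):
        """Store one observation: did the user say "yes" to the suggestion?"""
        if accepted:
            self._accepted[typed][suggestion] += 1

    def suggest(self, typed, min_votes=2):
        """Return the most-accepted suggestion, or None if data is too thin."""
        votes = self._accepted.get(typed)
        if not votes:
            return None
        best, count = votes.most_common(1)[0]
        return best if count >= min_votes else None


if __name__ == "__main__":
    suggester = SpellingSuggester()
    observations = [
        ("recieve", "receive", True),
        ("recieve", "receive", True),
        ("recieve", "receive", True),
        ("recieve", "relieve", True),
        ("recieve", "receive", False),
    ]
    for typed, suggestion, accepted in observations:
        suggester.record(typed, suggestion, accepted)
    print(suggester.suggest("recieve"))  # receive
    print(suggester.suggest("qwzx"))     # None
```

Nothing in the sketch refers to English, which is why the same code would work unchanged on observations gathered in any other language.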

In fact, Google uses the same philosophy of learning via massive data for its translation programs. They can translate from English to French, or German to Chinese, by matching up huge datasets of human-translated material. For instance, Google trained its French/English translation engine by feeding it Canadian documents, which are often released in both English and French versions. The Googlers have no theory of language, especially of French, no AI translator. Instead they have zillions of datapoints which in aggregate link “this to that” from one language to another.
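The "this to that" linking can be sketched with nothing more than co-occurrence counts over aligned sentence pairs. The example below is a deliberately simplified stand-in, not Google's method: it scores word pairs with the Dice coefficient, the function name `learn_word_links` is hypothetical, and the four-sentence corpus is invented for illustration. Real statistical translation systems rely on far more elaborate alignment and phrase models.

```python
from collections import Counter
from itertools import product


def learn_word_links(sentence_pairs):
    """Link each source word to the target word it co-occurs with most strongly.

    Uses the Dice coefficient over aligned sentence pairs. No grammar and no
    dictionary are involved, only counts. Hypothetical toy illustration.
    """
    src_count, tgt_count, pair_count = Counter(), Counter(), Counter()
    for src, tgt in sentence_pairs:
        src_words = set(src.lower().split())
        tgt_words = set(tgt.lower().split())
        src_count.update(src_words)
        tgt_count.update(tgt_words)
        pair_count.update(product(src_words, tgt_words))

    links = {}
    for s in src_count:
        def dice(t):
            # 2 * joint count / (count of s + count of t); 0 if never paired
            return 2 * pair_count[(s, t)] / (src_count[s] + tgt_count[t])

        # sorted() only makes tie-breaking deterministic
        links[s] = max(sorted(tgt_count), key=dice)
    return links


if __name__ == "__main__":
    # Invented miniature parallel corpus (English / French)
    corpus = [
        ("the cat sleeps", "le chat dort"),
        ("the dog sleeps", "le chien dort"),
        ("the cat eats", "le chat mange"),
        ("a dog eats", "un chien mange"),
    ]
    links = learn_word_links(corpus)
    print(links["cat"], links["dog"], links["sleeps"])  # chat chien dort
```

With only four sentence pairs the links are fragile; the approach depends on sheer volume of data to wash out coincidental pairings.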

Once you have such a translation system tweaked, it can translate from any language to another. And the translation is pretty good. Not expert level, but enough to give you the gist. You can take a Chinese web page and at least get a sense of what it means in English. Yet, as Peter Norvig, head of research at Google, once boasted to me, “Not one person who worked on the Chinese translator spoke Chinese.” There was no theory of Chinese, no understanding. Just data. (If anyone ever wanted a disproof of Searle’s riddle of the Chinese Room, here it is.)

If you can learn how to spell without knowing anything about the rules or grammar of spelling, and if you can learn how to translate languages without having any theory or concepts about grammar of the languages you are translating, then what else can you learn without having a theory?

In a cover article in Wired this month, Chris Anderson explores the idea that perhaps you could do science without having theories:

This is a world where massive amounts of data and applied mathematics replace every other tool that might be brought to bear. Out with every theory of human behavior, from linguistics to sociology. Forget taxonomy, ontology, and psychology. Who knows why people do what they do? The point is they do it, and we can track and measure it with unprecedented fidelity. With enough data, the numbers speak for themselves.

Petabytes allow us to say: “Correlation is enough.” We can stop looking for models. We can analyze the data without hypotheses about what it might show. We can throw the numbers into the biggest computing clusters the world has ever seen and let statistical algorithms find patterns where science cannot.

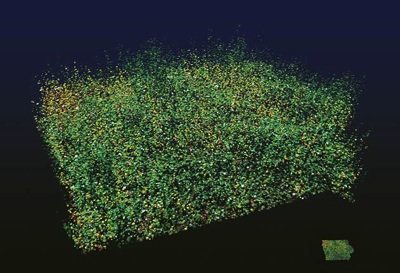

There may be something to this observation. Many sciences such as astronomy, physics, genomics, linguistics, and geology are generating extremely large datasets and constant streams of data at the petabyte level today. They’ll be at the exabyte level in a decade. Using old-fashioned “machine learning,” computers can extract patterns in this ocean of data that no human could ever possibly detect. These patterns are correlations. They may or may not be causative, but we can learn new things from them. Therefore they accomplish what science does, although not in the traditional manner.

What Anderson is suggesting is that sometimes enough correlations are sufficient. There is a good parallel in health. A lot of doctoring works on the correlative approach. The doctor may not ever find the actual cause of an ailment, or understand it if he/she did, but he/she can correctly predict the course and treat the symptom. But is this really science? You can get things done, but if you don’t have a model, is it something others can build on?

We don’t know yet. The technical term for this approach in science is Data Intensive Scalable Computation (DISC). Other terms are “Grid Datafarm Architecture” or “Petascale Data Intensive Computing.” The emphasis in these techniques is on the data-intensive nature of the computation, rather than on the computing cluster itself. The online industry calls this approach of investigation a type of “analytics.” Cloud computing companies like Google, IBM, and Yahoo, and some universities, have been holding workshops on the topic. In essence these pioneers are trying to exploit cloud computing, or the OneMachine, for large-scale science. The current tools include massively parallel software platforms like MapReduce and Hadoop (see my earlier post), cheap storage, and gigantic clusters of data centers. So far, very few scientists outside of genomics are employing these new tools. The intent of the NSF’s Cluster Exploratory program is to match scientists who own large database-driven observations with computer scientists who have access to and expertise with cluster/cloud computing.

My guess is that this emerging method will be one additional tool in the evolution of the scientific method. It will not replace any current methods (sorry, no end of science!) but will complement established theory-driven science. Let’s call this data-intensive approach to problem solving Correlative Analytics. I think Chris squandered a unique opportunity by titling his thesis “The End of Theory” because this is a negation, the absence of something. Rather it is the beginning of something, and this is when you have a chance to accelerate that birth by giving it a positive name. A non-negative name will also help clarify the thesis. I am suggesting Correlative Analytics rather than No Theory because I am not entirely sure that these correlative systems are model-free. I think there is an emergent, unconscious, implicit model embedded in the system that generates answers. If none of the English speakers working on Google’s Chinese Room have a theory of Chinese, we can still think of the Room as having a theory. The model may be beyond the perception and understanding of the creators of the system, and since it works it is not worth trying to uncover it. But it may still be there. It just operates at a level we don’t have access to.

But the models’ invisibility doesn’t matter because they work. It is not the end of theories, but the end of theories we understand. Writing in response to Chris Anderson’s article, George Dyson says this much better:

For a long time we were stuck on the idea that the brain somehow contained a “model” of reality, and that AI would be achieved by constructing similar “models.” What’s a model? There are 2 requirements: 1) Something that works, and 2) Something we understand. Our large, distributed, petabyte-scale creations, whether GenBank or Google, are starting to grasp reality in ways that work just fine but that we don’t necessarily understand.

Just as we will eventually take the brain apart, neuron by neuron, and never find the model, we will discover that true AI came into existence without ever needing a coherent model or a theory of intelligence. Reality does the job just fine.

By any reasonable definition, the “Overmind” (or Kevin’s OneComputer, or whatever) is beginning to think, though this does not mean thinking the way we do, or on any scale that we can comprehend.

What Chris Anderson is hinting at is that Science (and some very successful business) will increasingly be done by people who are not only reading nature directly, but are figuring out ways to read the Overmind.

What George Dyson is suggesting is that this new method of doing science — gathering a zillion data points and then having the OneMachine calculate a correlative answer — can also be thought of as a method of communicating with a new kind of scientist, one who can create models at levels of abstraction (in the zillionics realm) beyond our own powers.

So far Correlative Analytics, or the Google Way of Science, has primarily been deployed in sociological realms, like language translation or marketing. That’s where the zillionic data has been: all those zillions of data points generated by our collective life online. But as more of our observations and measurements of nature are captured 24/7, in real time, by an increasing variety of sensors and probes, science too will enter the field of zillionics, and its data will be easily processed by the new tools of Correlative Analytics. In this part of science, we may get answers that work, but which we don’t understand. Is this partial understanding? Or a different kind of understanding?

Perhaps understanding and answers are overrated. “The problem with computers,” Pablo Picasso is rumored to have said, “is that they only give you answers.” These huge data-driven correlative systems will give us lots of answers — good answers — but that is all they will give us. That’s what the OneComputer does – gives us good answers. In the coming world of cloud computing perfectly good answers will become a commodity. The real value of the rest of science then becomes asking good questions.