Zillionics

[Translations: French, Japanese]

More is different.

Large quantities of something can transform the nature of those somethings. Or as Stalin said, “Quantity has its a quality all its own.” Computer scientist J. Storrs Hall, in “Beyond AI“, writes:

If there is enough of something, it is possible, indeed not unusual, for it to have properties not exhibited at all in small, isolated examples. The difference [can be] at least a factor of a trillion (10^12). There is no case in our experience where a difference of a factor of a trillion doesn’t make a qualitative, as opposed to merely a quantitative, difference. A trillion is essentially the difference in weight between a dust mite, too small to see and too light to feel, and an elephant. It’s the difference between fifty dollars and a year’s economic output for the entire human race. It’s the difference between the thickness of a business card and the distance from here to the moon.

I call this difference zillionics.

The machinery of duplication, particularly digital duplication, can amplify ordinary quantities of everyday things into orders of abundance previously unknown. Populations can go from 10 to a billion, trillion and zillion range.

Your personal library may expand from 10 books to an all digital 30 million books in the Google Library. Your music collection may go from 100 albums to all the music in the world. Your personal archive may go from a box of old letters to a petabyte of information over your lifetime. A business may need to manage hundreds of petabytes of information per year. Scientists may generate gigabytes of data per second. The number of files a government may need to track, secure, and analyze may reach into the quintrillions.

Zillionics is a new realm, and our new home. The scale of so many moving parts require new tools, new mathematics, new mind shifts.

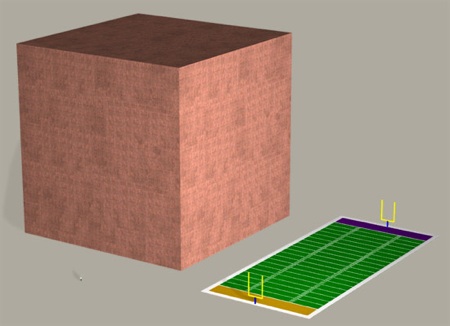

For scale, a trillion pennies next to a football field, from the Megapenny project.

When you reach the giga, peta, and exa orders of quantities, strange new powers emerge. You can do things at these scales that would have been impossible before. A zillion hyperlinks will give you information and behavior you could never expect from a hundred or thousand links. A trillion neurons give you a smartness a million won’t. A zillion data points will give you insight that a mere hundred thousand would never.

At the same time, the skills needed to manage zillionics are daunting. In this realm probabilities and statistics reign. Our human intuitions are unreliable.

I previously wrote:

We know from mathematics that systems containing very, very large numbers of parts behave significantly different from systems with fewer than a million parts. Zillionics is the state of supreme abundance, of parts in the many millions. The network economy promises zillions of parts, zillions of artifacts, zillions of documents, zillions of bots, zillions of network nodes, zillions of connections, and zillions of combinations. Zillionics is a realm much more at home in biology—where there have been zillions of genes and organisms for a long time—than in our recent manufactured world. Living systems know how to handle zillionics. Our own methods of dealing with zillionic plentitude will mimic biology. (From New Rules for the New Economy, 1998.)

The social web runs in the land of zillionics. Artificial intelligence, data mining, and virtual realities all require mastery of zillionics. As we ramp up the number of things we create, especially the ones we create collectively, we are also raising our media and culture into the realm of zillionics. The number of choices we have for music, art, images, words — anything! — is reaching the level of zillionics.

How do we prevent being paralyzed by zillionic choice (see the Paradox of Choice), or bullied by it? Is zillionics unlimited? This is a long tail so long, so wide, so deep, that it becomes something else again entirely.

More is different.