Was Moore’s Law Inevitable?

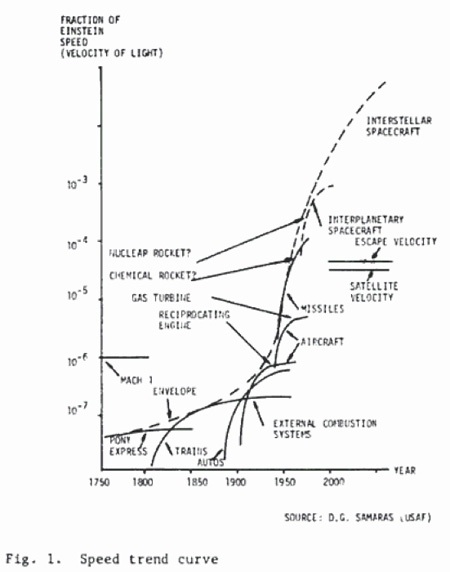

In the early 1950s the same thought occurred to many people at once: things are improving so fast and so regularly, there might be a pattern to the improvements. Maybe we could plot technological progress to date, then extrapolate the curves and see what the future holds. Among the first to do this systematically was the US Air Force. They needed a long-term schedule of what kinds of planes they should be funding, but aerospace was one of the fastest-moving frontiers in technology. Obviously they would build the fastest planes possible, but since it took decades to design, approve, and then deliver a new type of plane, the generals thought it prudent to glimpse what futuristic technologies they should be funding.

So in 1953 the Air Force Office of Scientific Research plotted out the history of the fastest air vehicles. The Wright Brothers’ first flight reached 6.8 kph in 1903, and jumped to 60 kph two years later. The air speed record kept increasing a bit each year and in 1947 the fastest flight passed 1,000 kph in a Lockheed Shooting Star flown by Colonel Albert Boyd. The record was broken four times in 1953, ending with the F-100 Super Sabre doing 1,215 kph. Things were moving fast. And everything was pointed toward space. According to Damien Broderick, the author of “The Spike”, in 1953 the Air Force…

Charted the curves and metacurves of speed. It told them something preposterous. They could not believe their eyes. The curve said they could have machines that attained orbital speed… within four years. And they could get their payload right out of Earth’s immediate gravity well just a little later. They could have satellites almost at once, the curve insinuated, and if they wished — if they wanted to spend the money, and do the research and the engineering — they could go to the Moon quite soon after that.

It is important to remember that in 1953 none of the technology for these futuristic journeys existed. No one knew how to go that fast and survive. Even the most optimistic die-hard visionaries did not expect a lunar landing any sooner than the proverbial “Year 2000.” The only voice telling them they could do it was a curve on a piece of paper. But the curve was right. Just not politically correct. In 1957 the USSR launched Sputnik, right on schedule. Then US rockets zipped to the Moon 12 years later. As Broderick notes, humans arrived on the Moon “close to a third of a century sooner than loony space travel buffs like Arthur C Clarke had expected it to occur.”

What did the curve know that Arthur C Clarke did not? How did it account for the secretive efforts of the Russians as well as dozens of teams around the world? Was the curve a self-fulfilling prophecy, or a revelation of a trend rooted deep in the nature of the technium? The answer may lie in the many other trends plotted since then. The most famous of them all is the trend known as Moore’s Law. In brief, Moore’s Law predicts that computing chips shrink by half in size and cost every 18-24 months. For the past 50 years it has been astoundingly correct.

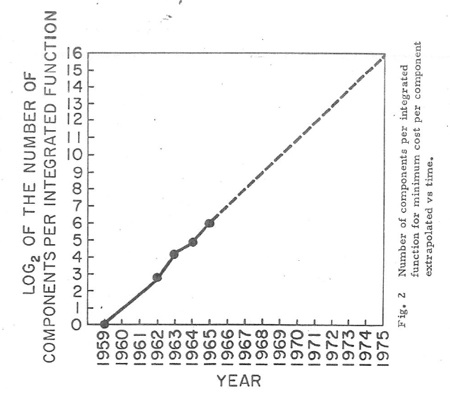

This trend was first noticed in 1960 by Doug Engelbart, a researcher at SRI in Palo Alto, California, who would later go on to invent the “windows and mouse” interface that is now ubiquitous on most computers. When he first started as an engineer, Engelbart worked in the aerospace industry testing airplane models in wind tunnels, where he learned how systematic scaling down led to all kinds of benefits and unexpected consequences. The smaller the model, the easier to fly. Engelbart imagined how the benefits of scaling down, or as he called it “similitude,” might transfer to a new invention SRI was tracking: multiple transistors on one integrated silicon chip. Perhaps as they were made smaller, circuits too might deliver a similar kind of similitude magic: the smaller a chip, the better. Engelbart presented his ideas on similitude to an audience of engineers at the 1960 Solid State Circuits Conference that included Gordon Moore, a researcher at Fairchild Semiconductor.

In the following years Moore began tracking the actual statistics of the earliest prototype chips. By 1964 he had enough data points to extrapolate the slope of the curve so far. But as Moore recalls,

I was not alone in making projections. At a conference in New York City that same year [1964], the IEEE convened a panel of executives from leading semiconductor companies: Texas Instruments, Motorola, Fairchild, General Electric, Zenith, and Westinghouse. Several of the panelists made predictions about the semiconductor industry.

Patrick Haggerty of Texas Instruments, looking approximately ten years out, forecast that the industry would produce 750 million logic gates a year. I thought that was perceptive but a huge number, and puzzled, “Could we actually get to something like that?” Harry Knowles from Westinghouse, who was considered the wild man of the group, said, “We’re going to get 250,000 logic gates on a single wafer.” At the time, my colleagues and I at Fairchild were struggling to produce just a handful. We thought Knowles’s prediction was ridiculous. C. Lester Hogan of Motorola looked at expenses and said, “The cost of a fully processed wafer will be $10.”

When you combine these predictions, they make a forecast for the entire semiconductor industry [for 1974]. If Haggerty were on target, the industry would produce 750 million logic gates a year. Using Knowles’s “wild” figure of 250,000 logic gates per wafer meant that the industry would only use 3,000 wafers for this total output. If Hogan was correct, and the cost per processed wafer was $10, that would mean that the total manufacturing cost to produce the yearly output of the semiconductor industry would be $30,000! Somebody was wrong.

As it turned out, the person who was the “most wrong” was Haggerty, the panelist I considered the most perceptive. His prediction of the number of logic gates that would be used turned out to be a ridiculously large underestimation. On the other hand, the industry actually achieved what Knowles foresaw, while I had labeled his suggestion as the ridiculous one. Even Hogan’s forecast of $10 for a processed wafer was close to the mark, if you allow for inflation and make a cost-per-square-centimeter calculation.
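Moore’s arithmetic in this recollection is easy to replay. The short sketch below simply multiplies out the three panel figures he quotes; the variable and function names are illustrative choices of mine, not anything from Moore’s text.

```python
# Figures quoted in Moore's recollection of the 1964 IEEE panel.
GATES_PER_YEAR = 750_000_000   # Haggerty: industry output, logic gates per year
GATES_PER_WAFER = 250_000      # Knowles: logic gates on a single wafer
COST_PER_WAFER = 10            # Hogan: dollars per fully processed wafer


def combined_forecast(gates_per_year, gates_per_wafer, cost_per_wafer):
    """Return (wafers needed per year, total yearly manufacturing cost in dollars)."""
    wafers = gates_per_year / gates_per_wafer
    return wafers, wafers * cost_per_wafer


wafers, total_cost = combined_forecast(GATES_PER_YEAR, GATES_PER_WAFER, COST_PER_WAFER)
print(f"{wafers:,.0f} wafers, ${total_cost:,.0f} per year")
```

Taken together the three forecasts imply an entire industry running on 3,000 wafers and $30,000 a year, which is why Moore concluded that somebody had to be wrong.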

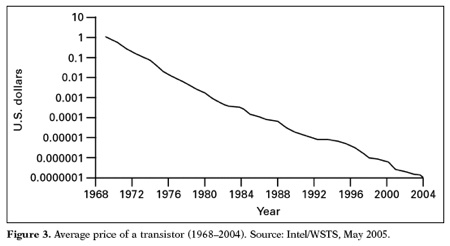

The trends were telling them something no one else was, impossible as it seemed. Moore kept adding data points as the semiconductor industry grew. He was tracking all kinds of parameters: number of transistors made, cost per transistor, number of pins, logic speed, and components per wafer. But one of them was cohering into a nice curve: the number of components per chip. In 1965, at the invitation of the editor of the trade journal Electronics, Moore wrote a piece on “the future of microelectronics.” In this short article he pointed out that the curve of progress in chip fabrication was rising exponentially, year after year. As Moore noted in his internal memo to the Fairchild patent officers, he took the current trend and “extrapolated into the wild blue yonder.” But in fact, how far would it really go?

Moore hooked up with Carver Mead, a fellow Caltech alumnus. Mead was an electrical engineer and early transistor expert. In 1967 Moore asked Mead what kind of theoretical limits were in store for microelectronic miniaturization. Mead had no idea, but as he did his calculations he made an amazing discovery: the efficiency of the chip would increase by the cube of the scale’s reduction. The benefits from shrinking were “exponential.” Microelectronics would not only become cheaper, they would also become better. As Moore puts it, “By making things smaller, everything gets better simultaneously. There is little need for tradeoffs. The speed of our products goes up, the power consumption goes down, system reliability improves by leaps and bounds, but especially the cost of doing things drops as a result of the technology.” Carver Mead was so caught up in Moore’s curves that he began to formalize them with physics equations, and he named the trend Moore’s Law. He became an evangelist for the idea, traveling to electronics companies, the military, and academics preaching that the future of electronics lay in ever-smaller blocks of silicon, and trying to “convince people that it really was possible to scale devices and get better performance and lower power,” and that there was no end in sight for this trend. “Every time I’d go out on the road,” Mead recalls, “I’d come to Gordon and get a new version of his plot.”

Today when we stare at the plot of Moore’s Law we can spot several striking characteristics of its 50-year run. The first surprise is that this is a picture of acceleration. The straight-line descending slope of the “curve” indicates a tenfold increase in goodness for every tick on the vertical log axis. Silicon computation is not simply getting better, but getting better faster. Relentless acceleration for five decades is rare in biology and unknown in the technium before this century. The explosion of good stuff is revealed in a line. So this graph is as much about the phenomenon of cultural acceleration as about silicon chips. In fact Moore’s Law has come to represent the principle of an accelerating future which underpins our expectations of the technium: the world of the made gets better, faster.

Secondly, even a cursory glance reveals the astounding regularity of Moore’s line. From the earliest points its progress has been eerily mechanical. Without interruption for 50 years, chips improve exponentially at the same speed of acceleration, neither more nor less. It could not be straighter if it had been engineered by a technological tyrant. Yet we are to believe that this strict unwavering trajectory came about via the chaos of the global marketplace and uncoordinated ruthless scientific competition. The line is so straight and unambiguous that it seems curious anyone would need convincing by Moore and Mead to “believe” in it. The question of faith lies in whether one believes the force of this “law” lies within the technology itself, or in a self-fulfilling social prophecy. Is Moore’s Law inevitable, a direction pushed forward by the nature of matter and computation, and independent of the society it was born into, or is it an artifact of self-organized scientific and economic ambition?

Moore and Mead themselves believe the latter. Writing in 2005, on the 40th anniversary of his law, Moore says, “Moore’s law is really about economics.” Carver Mead made it clearer yet: Moore’s Law, he says, “is really about people’s belief system, it’s not a law of physics, it’s about human belief, and when people believe in something, they’ll put energy behind it to make it come to pass.” In case that was not clear enough he spells it out further:

After [it] happened long enough, people begin to talk about it in retrospect, and in retrospect it’s really a curve that goes through some points and so it looks like a physical law and people talk about it that way. But actually if you’re living it, which I am, then it doesn’t feel like a physical law. It’s really a thing about human activity, it’s about vision, it’s about what you’re allowed to believe. Because people are really limited by their beliefs, they limit themselves by what they allow themselves to believe what is possible.

Finally, in another reference, Mead adds: “Permission to believe that [the Law] will keep going,” is what keeps the Law going. Moore agrees in a 1996 article: “More than anything, once something like this gets established, it becomes more or less a self-fulfilling prophecy. The Semiconductor Industry Association puts out a technology road map, which continues this [generational improvement] every three years. Everyone in the industry recognizes that if you don’t stay on essentially that curve they will fall behind. So it sort of drives itself.”

The “technology road map” produced by the Semiconductor Industry Association in the 1990s was a major tool in cementing the role of Moore’s law in chips and society. According to David Brock, author of Understanding Moore’s Law, the SIA road map “transformed Moore’s law from a prediction to a self-fulfilling prophecy. It spelled out what needed to be accomplished, and when.” A major factor in the semiconductor manufacturing process is the photoresist masks which craft the thin etched conducting wires on a chip. The masks have to get smaller in order for the chip to get smaller. Elsa Reichmanis is the foremost photoresist technical guru in Silicon Valley. She says, “Advances in the [process] technology today are largely driven by the Semiconductor Industry Association.” Raj Gupta, a materials scientist and CEO of Rohm and Haas, declares, “They” (the SIA road map) “say what performance they need [for new electronic materials], and by which date.” Andrew Odlyzko from AT&T Bell Laboratories concurs: “Management is *not* telling a researcher, ‘You are the best we could find, here are the tools, please go off and find something that will let us leapfrog the competition.’ Instead, the attitude is, ‘Either you and your 999 colleagues double the performance of our microprocessors in the next 18 months, to keep up with the competition, or you are fired.’” Gordon Moore reiterated the importance of SIA in a 2005 interview with Charlie Rose: “the Semiconductor Industry Association put out a roadmap for the technology for the industry that took into account these exponential growths to see what research had to be done to make sure we could stay on that curve. So it’s kind of become a self-fulfilling prophecy.”

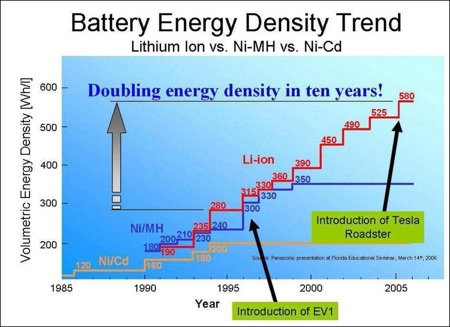

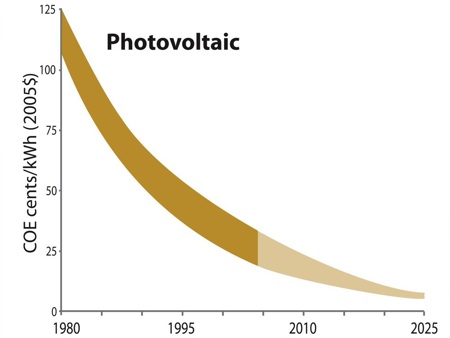

Clearly, expectations of future progress guide current investments. The inexorable curve of Moore’s Law helps focus money and intelligence on very specific goals: keeping up with the Law. The only problem with accepting these self-constructed goals as the source of such regular progress is that other technologies which might benefit from the same belief do not show the same zooming curve. We witness steady, quantifiable progress in other solid state technologies such as solar photovoltaic panels, which are also made of silicon. Their price per unit of performance has been sinking for two decades, but not exponentially. Likewise the power density of batteries has been increasing steadily for two decades but, again, nowhere near the rate of computer chips.

Why don’t we see Moore’s Law type of growth in the performance of solar cells if this is simply a matter of believing in a self-fulfilling prophecy? Surely, such an acceleration would be ideal for investors and consumers. Why doesn’t everybody simply clap for Tinker Bell to live, to *really* believe, and then the hoped-for self-made fairy will kick in, and solar cells will double in efficiency and halve in cost every two years? That kind of consensual faith would generate billions of dollars. It would be easy to find entrepreneurs eager to genuinely believe in the prophecy. The usual argument applied against this challenge is that solar cells and batteries are governed primarily by chemical processes, which chips are not. As one expert put the failure of exponential growth in batteries: “This is because battery technology is a prisoner of physics, the periodic table, manufacturing technology and economics.” That’s plain wrong. Manufacturing silicon integrated chips is an intensely chemical achievement, as much a prisoner of physics, the periodic table and manufacturing as batteries. Mead admits this: “It’s a chemical process that makes integrated circuits, through and through.” In fact the main technical innovation of Silicon Valley chip fabrication was to employ the chemical industry to make electronics instead of chemicals. Solar and batteries share the same chemical science as chips.

So what is the curve of Moore’s law telling us that expert insiders don’t see? That this steady acceleration is more than an agreement. It originates within the technology. There are other technologies, also solid state material science, that exhibit a steady curve of progress, and just like Moore’s Law, their progress *is* exponential. They too seem to obey a rough law of remarkably steady exponential improvement.

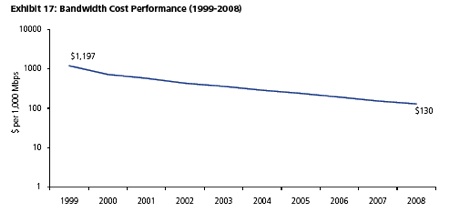

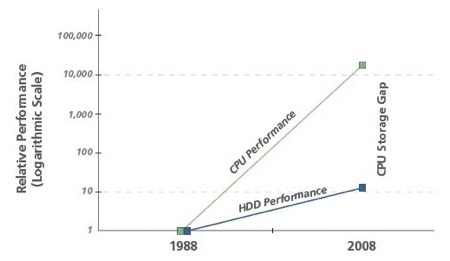

Consider the recent history (for the last 10-15 years) of the cost per performance of communication bandwidth, and digital storage.

The picture of their exponential growth parallels the integrated circuit in every way except their slope, the rate at which they are speeding up. Why is the doubling period in one technology eight years versus two? Aren’t the same financial system and expectations underpinning them?

Except for the slope, these graphs are so similar, in fact, that it is fair to ask whether these curves are just reflections of Moore’s Law. Telephones are heavily computerized and storage discs are organs of computers. Since progress in speed and cheapness of bandwidth, and storage capacity, relies directly and indirectly on accelerating computing power, it may be impossible to untangle the destiny of bandwidth and storage from computer chips. Perhaps the curves of bandwidth and storage are simply derivatives of the one uber Law? Without Moore’s Law ticking beneath them, would they even remain solvent?

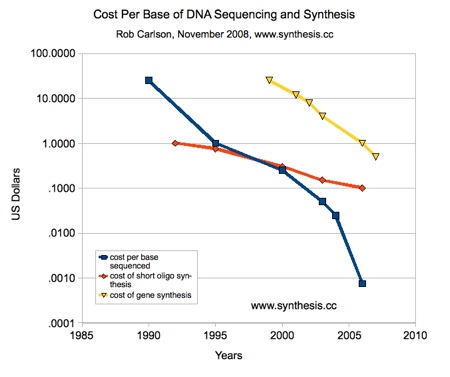

Consider another encapsulation of accelerating progress. For a decade or so biophysicist Rob Carlson has been tabulating progress in DNA sequencing and synthesis. Graphed similarly to Moore’s Law (above), in cost performance per base pair, this technology too displays a steady drop when plotted on a log axis. I asked Carlson how much of this gain is due to Moore’s Law. If computers did not get better, faster, cheaper each year, would DNA sequencing and synthesis continue to accelerate? Carlson replied:

Most of the fall in costs of sequencing and synthesis have to do with parallelization, new methods, and falling costs for reagents. Moore’s law must have had some effect through cheap hardware that enables desktop CAD, but that is fairly tangential. If Moore’s Law stopped, I don’t think it would have much effect. The one area it might affect is processing the raw sequence information into something comprehensible by humans. Crunching the data of DNA is at least as expensive as getting the sequence of the physical DNA.

Larry Roberts, the principal architect of the ARPANET, the early internet, keeps detailed stats on communication improvements. He has noticed that communication technology in general also exhibits a Moore’s Law-like rise in quality. Might progress in wires also be correlated to progress in chips? Roberts says that the performance of communication technology “is strongly influenced by and very similar to Moore’s Law but not identical as might be expected.”

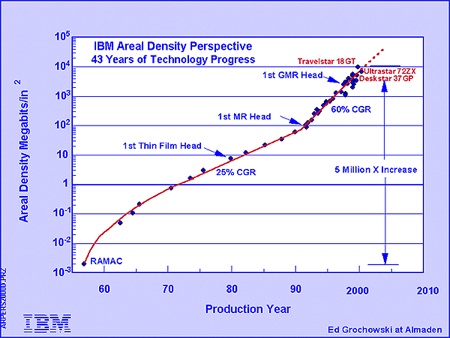

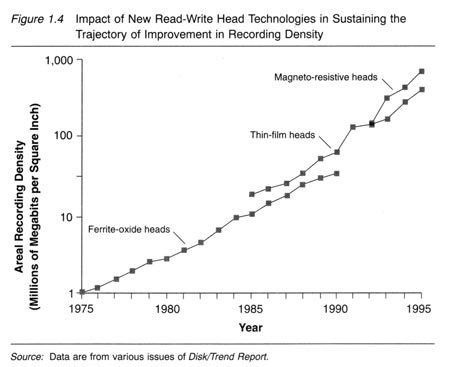

In the inner circle of the tech industry the fast-paced drop in prices for magnetic storage is called Kryder’s Law. It’s the Moore’s Law for computer storage and is named after Mark Kryder, the chief technical officer of Seagate, a major manufacturer of hard disks. Kryder’s Law says that the cost/performance of hard disks is increasing exponentially at a steady rate of 40% per year. I asked Kryder the same question: Is Kryder’s Law dependent upon Moore’s Law? If computers did not get better, cheaper every year, would storage continue to do so? Kryder responded: “There is no direct relationship between Moore’s law and Kryder’s law. The physics and fabrication processes are different for the semiconductor devices and magnetic storage. Hence, it is quite possible that semiconductor scaling could stop while scaling of disk drives continues. In fact, I believe that Flash [silicon chip-based storage such as memory cards and thumb drives] will hit a barrier well before hard drives do.” Of course, if computers were to cease getting more powerful, the need for extra storage or faster communications would also slow down. So indirect market forces entwine the Laws, but only to a secondary degree. Andrew Odlyzko, who now studies the growth of the internet at the University of Minnesota, says, “I would say Moore’s laws in their various disciplines are highly correlated and synergistic, drawing on the same pool of basic science and technology. It is hard (but not impossible) to imagine that if improvements in transistor density ceased, then photonics or wireless could progress for long.”
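To compare a rate like Kryder’s “40% per year” with the doubling times used for Moore’s Law, the two have to be put on the same footing. The sketch below assumes the 40% figure means a compounded annual improvement in the cost/performance ratio (my reading, not something Kryder states), and the function name is hypothetical.

```python
import math


def doubling_time_months(annual_rate):
    """Months needed to double at a compounded annual improvement rate.

    annual_rate is a fraction, e.g. 0.40 for 40% per year.
    Solves (1 + annual_rate) ** (months / 12) == 2 for months.
    """
    return 12 * math.log(2) / math.log(1 + annual_rate)


# Kryder's Law as quoted above: 40% per year (assumed to compound annually).
print(f"40% per year doubles in about {doubling_time_months(0.40):.1f} months")
```

Under that assumption, 40% a year works out to a doubling roughly every two years, which puts hard disks in the same neighborhood as the 18-24 month doubling usually quoted for chips.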

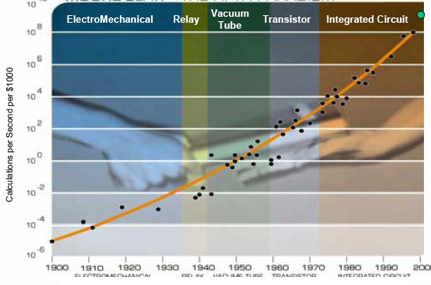

While expectations can certainly guide technological progress, consistent law-like improvement must be more than self-fulfilling prophecy. For one thing, this obedience to a curve often begins long before anyone notices there is a law, and way before anyone would be able to influence it. The exponential growth of magnetic storage began in 1956, almost a whole decade before Moore formulated his law for semiconductors and 50 years before Kryder formalized its existence. Rob Carlson says, “When I first published the DNA exponential curves, I got reviewers claiming that they were unaware of any evidence that sequencing costs were falling exponentially. In this way the trends were operative even when people disbelieved it.” Ray Kurzweil dug into the archives to show that something like Moore’s Law had its origins as far back as 1900, long before electronic computers existed, and of course long before the path could have been constructed by self-fulfillment. Kurzweil estimated the number of “calculations per second per $1,000” performed by turn-of-the-century analog machines, by mechanical calculators, and later by the first vacuum tube computers and extended the same calculation to modern semiconductor chips. He established that this ratio has increased exponentially for the past 109 years. More importantly, the curve (let’s call it Kurzweil’s Law) transects five different technological species of computation: electromechanical, relay, vacuum tube, transistors and integrated circuits. An unobserved constant operating in five distinct paradigms of technology for over a century must be more than an industry road map. It suggests that the nature of these ratios is baked deep into the fabric of the technium.

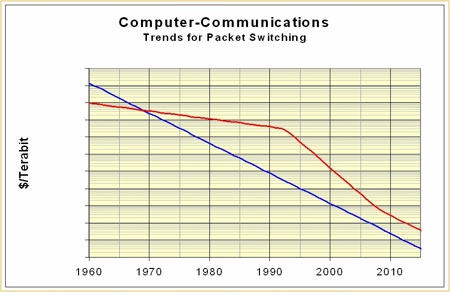

But in every contemporary case (DNA sequencing, magnetic storage, semiconductors, bandwidth, pixel density), once a fixed curve is revealed, scientists, investors, marketers, and journalists all grab hold of it and use it to guide experiments, investments, schedules and publicity. The map becomes the territory. There is no doubt curves are used as tools, and that they can sway the rate of progress. Moore tells one story: “When the industry fully recognized that we were truly on a pace of a new-process-technology-generation-every-three-years, we started to shift to a new-generation-every-two-years to get a bit ahead of the competition. We changed the slope.” For three and a half decades, from 1956 to 1991, IBM set the pace of improvements in the density of hard disk drives at about 25% per year. That’s just below the rate of semiconductor progress. IBM owned the patents and this rate allowed them to comfortably maintain high profit margins. But in the early 1980s, many competitors sprang up making smaller disks which had lower densities, and their densities were improving much faster than IBM’s schedule. So in 1990, IBM changed the slope. They mandated that henceforth their improvement would be 60% a year. This spurred an escalation of R&D investment, faster growth by the competitors, and more R&D by IBM, so that from the late 1990s onward the slope of growth increased to more than 100% per year. The slope of progress can be changed by pouring money down it. Mark Kryder says, “My guess is that you could double the density growth rate with something less than double the R&D dollars.” The slope can also be changed by regulations. Larry Roberts offers this evidence for the effects of the US Telecommunications Act of 1993. “From before 1960 until de-regulation about 1993, the cost per terabit of communications [red line in the graph below; the blue is Moore’s Law] dropped very slowly, halving every 79 months. Then, once fiber was in place, DWDM and free enterprise took the market price down fast, halving every 12 months.”
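Roberts’s two halving times are easier to feel as yearly price drops. The sketch below converts a halving time in months into the fraction by which cost falls each year, assuming a smooth exponential decline between the endpoints; the function name is a hypothetical one chosen for illustration.

```python
def annual_cost_decline(halving_months):
    """Fraction by which cost falls per year, given a halving time in months.

    After 12 months the cost is 0.5 ** (12 / halving_months) of its
    starting value, so the yearly decline is one minus that.
    """
    return 1 - 0.5 ** (12 / halving_months)


# Halving times quoted by Larry Roberts for the cost per terabit.
for label, months in [("before deregulation", 79), ("after deregulation", 12)]:
    print(f"{label}: cost falls about {annual_cost_decline(months):.0%} per year")
```

On this reading the slope changed from a drop of about a tenth per year to a drop of half per year, roughly a fivefold steepening of the yearly decline.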

Since the rate of these explosions of innovation can be varied to some degree by applying money or laws, their trend lines cannot be fully inherent in the material itself. At the same time, since these curves begin and advance independent of our awareness, and do not waver from a straight line under enormous competition and investment pressures, their course must in some way be bound to the materials.

If you scour the technium for examples of enduring exponential progress, you’ll find most candidates within fields related to material science. For instance the maximum rotational speed of an electric motor is not following an exponential curve. Nor is the maximum miles-per-gallon performance of an automobile engine. In fact most technical progress is not exponential, nor steady. Even most progress in material science is not exponential. We are not exponentially increasing the hardness of steel. Nor are we exponentially increasing the percentage yield of say, sulfuric acid, or petroleum distillates, from their precursors.

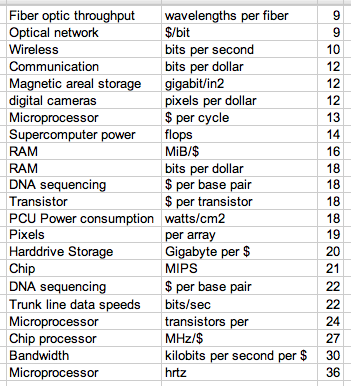

I gathered as many examples of current exponential progress as I could find. I was not seeking examples where the total quantity produced (watts, kilometers, bits, basepairs, traffic, etc.) was rising exponentially, since these quantities are skewed by our rising populations. More people use more stuff, even if it is not improving. Rather I looked for examples that showed performance ratios (such as pounds per inch, illumination per dollar) steadily increasing if not accelerating. Here is a set of quickly found examples, and the rate at which their performance is doubling. (This will display as halving the time.)

Doubling Times of Various Technological Performance in Months

The first thing to notice is that all these examples demonstrate the effects of scaling down, or working with the small. In this microcosmic realm energy is not very important. We don’t see exponential improvement in efforts to scale up, to keep getting bigger, as with skyscrapers and space stations. Airplanes aren’t getting bigger, flying faster, and becoming more fuel efficient at an exponential rate. Gordon Moore jokes that if the technology of air travel experienced the same kind of progress as Intel chips, a modern-day commercial aircraft would cost $500, circle the earth in 20 minutes, and only use five gallons of fuel for the trip. However, the plane would only be the size of a shoebox! We don’t see a Moore’s Law-type of progress at work while scaling up because energy needs scale up just as fast, and energy is a major limiting constraint, unlike information. So our entire new economy is built around technologies that scale down well: photons, electrons, bits, pixels, frequencies, and genes. As these inventions miniaturize, they reach closer to bare atoms, raw bits, and the essence of matter and information. And so the fixed and inevitable path of their progress derives from this elemental essence.

The second thing to notice about this set of examples is the narrow range of slopes, or doubling times (in months). The particular power being optimized in these technologies is doubling every 8 to 30 months. Every one of them is getting twice as better every year or two no matter the technology. What’s up with that? Engineer Mark Kryder’s explanation is that this “twice as better every two years” is an artifact of the corporate structure where most of these inventions happen. It just takes 1-2 years of calendar time to conceive, design, prototype, test, manufacture and market a new improvement, and while a 5- or 10-fold increase is very difficult to achieve, almost any engineer can deliver a factor of two. Voila! Twice as better every two years. Engineers unleashed equals Moore’s Law.

But, as mentioned earlier, we see engineers unleashed in other departments of the technium without the appearance of exponential growth. And in fact not every aspect of semiconductor extrapolation resolves into a handy “law.” Moore recalls that in a 1975 speech he forecasted the expected growth of other attributes of silicon chips “just to demonstrate how ridiculous it is to extrapolate exponentials.” Extrapolating the maximum size of the wafer of silicon used to grow the chips (which was increasing as fast as the number of components) would yield, he calculated, a nearly 2-meter (6-foot) diameter crystal by 2000, which just seemed unlikely. That did not happen; they reached 300 mm (1 foot).

And as small as those differences in slopes are, say, between the 21-month doubling in power of a CPU and the 16-month doubling in density of RAM storage, that gap is significant. Curiously, the gap between two exponential curves, measured as the ratio of one to the other, itself grows exponentially. That means that over time, the performance of two technologies under the same financial regime, the same engineering society, the same technium, is diverging at an exponential rate. Clearly, this ever-widening gap is due to an intrinsic quality of the technologies.
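The divergence can be made concrete. If one technology doubles every 16 months and another every 21, the ratio between them is itself an exponential with its own, slower doubling time. The sketch below works that out; the function names are hypothetical, and both curves are assumed to start from the same level at month zero.

```python
def gap_ratio(months, fast_doubling, slow_doubling):
    """Ratio of the faster curve to the slower one after `months`,
    assuming both start equal and each doubles on its own schedule."""
    return 2 ** (months / fast_doubling) / 2 ** (months / slow_doubling)


def gap_doubling_months(fast_doubling, slow_doubling):
    """Months for the ratio between the two curves to double.

    The ratio is 2 ** (months * (1/fast - 1/slow)), so it doubles
    whenever that exponent grows by one.
    """
    return 1 / (1 / fast_doubling - 1 / slow_doubling)


# RAM density (16-month doubling) versus CPU power (21-month doubling).
period = gap_doubling_months(16, 21)
print(f"The gap doubles about every {period:.1f} months")
print(f"After 20 years the ratio is about {gap_ratio(240, 16, 21):.0f}x")
```

With these two slopes the gap doubles a little less often than every five and a half years, so a difference of only five months in doubling time compounds into an order-of-magnitude split within a couple of decades.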

Should we ever arrive on other inhabited worlds in our galaxy, we should expect some of them to have reached the stage of microelectronics in their own technium. Once they discover the application of binary logic to microcircuits, they too will experience a version of Moore’s Law. The lessons of the microcosm will play out their inevitable course: as circuits get smaller, they get faster, more accurate, and cheaper. Their alien computers will quickly get better and more affordable at once, which in turn will propel innumerable other technological explosions to their great delight. Whenever it begins, the steady acceleration of progress in solid-state computation should last for at least 25 doublings (what we’ve experienced so far), or a 33 million-fold increase in value. But while Moore’s Law is inevitable in its progression, its slope is not.
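The “33 million-fold” figure is just 25 doublings multiplied out, and the same arithmetic shows how the calendar length of that run depends on the slope. In the sketch below the 18- and 24-month doubling periods are the ones quoted earlier for Moore’s Law; the function names are illustrative.

```python
def growth_factor(doublings):
    """Total improvement after a given number of doublings."""
    return 2 ** doublings


def years_for_doublings(doublings, months_per_doubling):
    """Calendar years needed for that many doublings at a fixed pace."""
    return doublings * months_per_doubling / 12


print(f"25 doublings = {growth_factor(25):,}-fold")
for months in (18, 24):
    years = years_for_doublings(25, months)
    print(f"at {months} months per doubling: {years:.1f} years")
```

The same 25 doublings take about 37 years at the faster pace and 50 at the slower, which is the sense in which the progression can be fixed while the slope is not.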

The slant of increase in a particular world may indeed be a matter of macro-economics. Here Moore and Mead may be correct: the slope of the Law rests on economics. Whether computing power doubles every month or every decade will depend on many factors of that particular society: population size, volume of the economy, velocity of money, evolution of financial instruments. The constant speed of discovery might hinge in part on the total available pool of engineers, whether it is 10 thousand versus 10 million. A faster planetary velocity of money may permit a faster doubling period. All these economic factors combine to produce a fixed constant for that world at that phase. If Moore’s Law turned out to be a universal fixture in the computational phase of civilization, this fixed constant might even be used as a classification marker. Hypothetical civilizations are currently classified by their energy use. The Russian astronomer Nikolai Kardashev specified that a class I civilization would leverage its home planet’s energy, a class II its star’s, and class III civilization its galaxy’s energy. In a Moore’s Slope scheme, a class I civilization would exhibit a chip-power doubling rate measured in “days,” while a class II civilization would show doublings measured in days squared, and class III in days cubed, and so on.

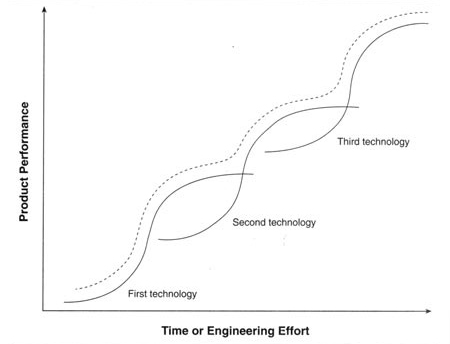

In any case, at some point on our planet, or any planet, the curve will plateau. Moore’s law will not continue forever. Any specific exponential growth will inevitably smooth out into a typical S-shaped curve. This is the archetypical pattern of growth: after a slow ramp up, gains take off straight up like a rocket, and then after a long run level out slowly. Back in 1830 only 37 kilometers of railroad track had been laid in the US. That count doubled in the next ten years, and then doubled in the decade after that, and kept doubling every decade for 60 years. In 1890 any reasonable railroad buff would have predicted that the US would have hundreds of millions of kilometers of railroad by a hundred years later. There would be railroad to everyone’s house. Instead there were fewer than 400,000 kilometers. However, Americans did not cease to be mobile. We merely shifted our mobility and transportation to other species of invention. We built automobile highways, and airports. The miles we travel keep expanding, but the exponential growth of that particular technology peaked and plateaued.
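One common way to draw such an S-shaped curve is the logistic function, which looks exponential early on and then flattens against a ceiling. The sketch below is only an illustration of that shape: the logistic form is my choice of model, and the ceiling, rate and midpoint are made-up numbers, not a fit to railroad or chip data.

```python
import math


def logistic(t, ceiling, rate, midpoint):
    """S-shaped growth: near-exponential at first, leveling off at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


# Hypothetical parameters: early growth doubles each step (rate = ln 2),
# the curve is halfway to its ceiling at step 10, and it tops out at 1000.
CEILING, RATE, MIDPOINT = 1000.0, math.log(2), 10.0

for t in range(0, 21, 2):
    print(f"step {t:2d}: {logistic(t, CEILING, RATE, MIDPOINT):8.1f}")
```

In the first few steps each value is almost exactly double the one before, so anyone extrapolating from that stretch alone would predict unbounded growth; by the last steps the curve has all but stopped moving.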

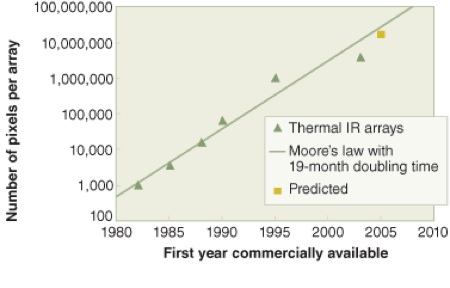

Much of the churn in the technium is due to our tendency to shift what we care about. Mastering one technology engenders new technological desires. A recent example: The first digital cameras had very rough picture resolution. Then scientists began cramming more and more pixels onto one sensor to increase photo quality. Before they knew it, the number of pixels possible per array was on an exponential curve, heading into megapixel territory and beyond. But after a decade of acceleration, consumers shrugged off the increasing number of pixels; the current resolution was sufficient. Their concern shifted to the speed of the pixel sensors, or the response in low light — things no one cared about before. So a new metric is born, and a new curve started, and the exponential curve of ever-more pixels per array will gradually abate.

Moore’s Law is headed for a similar fate. When, no one knows. “Moore’s Law, which has held as the benchmark for IC scaling for more than 40 years, will cease to drive semiconductor manufacturing after 2014,” Len Jelinek, the director of a major semiconductor manufacturer, claimed in 2009. Carl Anderson, an IBM Fellow, announced at an industry conference in April 2009 that the end of Moore’s Law was at hand: “There was exponential growth in the railroad industry in the 1800s; there was exponential growth in the automobile industry in the 1930s and 1940s; and there was exponential growth in the performance of aircraft until they reached the speed of sound. But eventually exponential growth always comes to an end.” But IBM has been wrong several times before. In 1978, IBM scientists predicted Moore’s Law had only 10 years left. Whoops. In 1988, they again said it would end in 10 years. Oops, again. Gordon Moore himself predicted his law would end when it reached 250-nanometer manufacturing, which it passed in 1997. Today the industry is aiming for 20 nanometers. In 2009, Intel CEO Craig Barrett said, “We can scale it down another 10 to 15 years. Nothing touches the economics of it.”

Whether Moore’s Law, as the count of transistor density, has one, two, or three decades left to zoom and drive our economy, we can be sure it will peter out as other past trends have, by being sublimated into another rising trend. When we reach the limits of miniaturization, and can no longer cram more circuits on one chip, we can just make the chip bigger (that’s Moore’s suggestion!). Carl Anderson of IBM cites three next-generation technologies that are candidates for the next round of exponential growth: piling transistors on top of each other (known as 3-D chips), optical computers, or making existing circuits work faster (accelerators). And then there is parallel processing: using many processor cores at once, or lots of chips connected in parallel. In other words, maybe we don’t need more and more transistors on one chip. Maybe we need rearrangements of the bits we have. We may consider ourselves to be a million times cleverer than a monkey, but we don’t have a million times as many genes, or a million times as many neurons. Our gene and neuron counts are almost identical to those of the other apes. The evolutionary growth in those trends stopped with Sapiens (us), and switched to increasing other factors. As Moore’s Law abates, we’ll find alternatives to making a million times more transistors. In fact, we may already have enough transistors per chip to do what we want, if only we knew how.

Moore began by measuring the number of “components” per square inch, then switched to transistors, and now we measure transistors per dollar. As one exponential trend (say, the density of transistors) decelerates, we begin caring about a new parameter (say, speed of operations, or number of connections) and so we begin measuring a new metric, and plotting a new graph. Suddenly, another “law” is revealed. As the character of this new technique is studied, exploited and optimized, its natural pace is revealed, and when this trajectory is extrapolated, it becomes the creators’ goal. In the case of computing, this newly realized attribute of microprocessors will become, over time, the new Moore’s Law.

Like the Air Force’s 1953 graph of top speed, the curve is one way the technium speaks to us. Carver Mead, who barnstormed the country waving plots of Moore’s Law, believes we need to “listen to the technology.” As one curve inevitably flattens out, its momentum is taken up by another S-curve. If we inspect any enduring curve closely we can see how definitions and metrics shift over time to accommodate new substitute technologies.

For instance, a close scrutiny of Kryder’s Law in hard disk densities shows that it is composed of a sequence of overlapping smaller trendlines. These may have slightly different slopes, but in aggregate, calibrated with an appropriate common metric, they yield the unwavering trajectory.

This cartoon graph dissects what is happening. A stack of S-curves, each one containing its own limited run of exponential growth, overlaps to produce a long-run emergent exponential growth line. The trend bridges more than one technology, giving it a transcendent power. As one exponential boom is subsumed into the next boom, an established technology relays its momentum to the next paradigm, and carries forward an unrelenting growth. The performance is measured at a higher emergent level, not seen at first in the specific technologies. It reveals itself as a long-term trans-technium benefit, a macro trend that continues indefinitely. In this way Moore’s Law, redefined, will never end.
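The stacking idea can be checked numerically. What follows is only a toy sketch, not a model of any real technology: it assumes each generation follows a logistic S-curve, that each new generation tops out ten times higher than the last, and that generations arrive ten time units apart. The function names and all three parameters (`generations`, `spacing`, `gain`) are hypothetical choices made for illustration.

```python
import math

def logistic(t, midpoint, ceiling, rate=1.0):
    """One technology's S-curve: slow start, steep middle, plateau at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))

def stacked(t, generations=8, spacing=10.0, gain=10.0):
    """Sum of successive S-curves (all parameters are illustrative assumptions).

    Generation k is centered at time k * spacing and plateaus at gain ** k,
    so each newcomer eventually dwarfs the one before it.
    """
    return sum(
        logistic(t, midpoint=k * spacing, ceiling=gain ** k)
        for k in range(generations)
    )

if __name__ == "__main__":
    # Each single curve flattens, yet the total keeps multiplying
    # by a nearly constant factor per interval.
    for t in range(10, 61, 10):
        ratio = stacked(t + 10) / stacked(t)
        print(f"t={t:2d}  total={stacked(t):12.1f}  growth over next interval: x{ratio:.2f}")
```

Under these assumptions every individual curve levels off, while the sum grows by close to the same factor in each interval, which is the emergent straight line (on a log scale) that the cartoon describes. Change the spacing or the gain and the slope changes, but the overall shape does not.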

But while the slow demise of the transistor trend is inevitable, if the larger meta version of all the related Moore’s Laws (increasing, cheaper computer/internet power) were to suddenly cease on Earth in the next few years, it would be disastrous. These performance ratios improve by roughly 50% annually. That means things we care about get better by half as much every year. Imagine if you got half-again smarter every year, or remembered half as much more this year as last. Embedded deep in the technium (as we now know it) is the remarkable capacity of half-again annual improvement. The optimism of our age rests on the reliable advance of Moore’s promise: that stuff will get significantly, seriously, desirably better and cheaper tomorrow. If the things we make will get better the next time, that means that the Golden Age is ahead of us, and not in the past. With the meta Moore’s Law out of action, half or more of the optimism of our time would vanish.

But even if we wanted to, what on earth could derail Moore’s Law? Suppose we were part of a vast conspiracy to halt Moore’s Law. Maybe we believed it artificially elevates undue optimism and encourages misguided expectations of a Singularity. What could we do? How would you stop it? Those who believe its power rests primarily in its self-reinforced expectations would say: simply announce it will end. If enough smart believers circulate declaring Moore’s Law is over, then it will be over. The loop of self-fulfilling prophecy would be broken. But all it takes is one maverick to push ahead and make further progress, and the spell would be broken. The race would resume until the physics of scaling down gave out.

More clever folk might reason that since the economic regime as a whole determines the slope of Moore’s Law, you could keep decreasing the quality of the economy until it stopped. Perhaps through armed revolution, you installed an authoritarian command-style policy (like the old state-communisms), whose lackadaisical economic growth killed the infrastructure for exponential increases in computing power. I find that possibility intriguing, but I have my doubts. If, in a counterfactual history, communism had won the Cold War, and microelectronics were invented in a global Soviet-style society, my guess is that even that alternative policy could not stifle Moore’s Law. Progress might roll out slower, but I don’t doubt Stalinist scientists would tap into the law of the microcosm, and soon marvel at the same technical wonder we do: chips improving exponentially as constant linear effort is applied.

I suspect Moore’s Law is something we don’t have much sway over, other than its doubling period. Moore’s Law is the Moira of our age. In Greek mythology the Moira were the three Fates. Usually depicted as dour spinsters, one Moira spun the thread of a newborn’s life. The second Moira counted out the thread’s length. And the third Moira cut the thread at death. A person’s beginning and end were predetermined. But what happened in between was not inevitable. Humans and gods could work within the confines of one’s ultimate destiny. According to legend, Dionysos, the god of wine and parties, was unable to cry. But he loved a wood spirit, a half-satyr named Ampelos, who was killed by a wild bull. Dionysos was so stricken by Ampelos’ death that he finally wept. So to appease Dionysos’ unexpected grief, the Moira transformed Ampelos into the first grapevine. Then, according to the bards, “the inflexible threads of Moira were unloosened and turned back.” Ampelos’ blood became the wine that Dionysos loved. Fate was obeyed, yet Dionysos got what he chose. Within the inexorable flow of larger trends our free will flits, moving us in tandem with destiny.

The unbending trajectories uncovered by Moore, Kryder, Gilder, and Kurzweil spin through the technium, forming a long thread. The thrust of the thread is inevitable, its course destined by the nature of matter and discovery. Once untied, the thread of Moore’s Law will unravel steadily, inexorably towards its anchor at the bottom of physics. Along the way it unleashes other threads of technology we might wish to pull. Each of those threads, of Communication, Bandwidth, Storage, will unravel in its predetermined manner as well. We choose how fast to unzip them, and which ones to unloosen next. Collectively we push and pull with exceeding energy to wrench the threads from their place, but our efforts only serve to unravel them as they would unravel anyway. The technium holds our Moira, and the Moira play out our inflexible threads. Like Dionysos we can unloosen, but not remove them.

Listen to the technology, Carver Mead says. What do the curves say? Imagine it is 1965. You’ve seen the curves Gordon Moore discovered. What if you believed the story they were trying to tell us: that each year, as sure as winter follows summer, and day follows night, computers would get half again better, and half again smaller, and half again cheaper, year after year, and that in 5 decades they would be 30 million times more powerful than they were then, and cheap? If you were sure of that back then, or even mostly persuaded, and if a lot of others were as well, what good fortune you could have harvested. You would have needed no other prophecies, no other predictions, no other details. Just knowing that single trajectory of Moore’s, and none other, we would have educated differently, invested differently, prepared more wisely to grasp the amazing powers it would sprout.
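It is worth running the compounding arithmetic behind a figure like “30 million times,” because the result depends heavily on which pace you assume. The sketch below is only back-of-the-envelope: the two helper functions are made up for illustration, and the two-year doubling period is an assumption, one commonly quoted pace for Moore’s Law rather than a number taken from this essay.

```python
def growth_factor(rate_per_year, years):
    """Total multiplier after compounding a fixed yearly improvement."""
    return (1.0 + rate_per_year) ** years

def doubling_factor(doubling_period_years, years):
    """Total multiplier when capability doubles once per period."""
    return 2.0 ** (years / doubling_period_years)

if __name__ == "__main__":
    years = 50  # "5 decades"

    # Literal "half again better" every single year.
    half_again = growth_factor(0.5, years)

    # Assumed alternative pace: one doubling every two years, i.e. 2 ** 25.
    two_year_doubling = doubling_factor(2, years)

    print(f"50% per year for {years} years:        x{half_again:,.0f}")
    print(f"doubling every 2 years for {years} years: x{two_year_doubling:,.0f}")
```

A doubling every two years over five decades gives 2 to the 25th power, about 33.5 million, which is close to the “30 million” in the text. Half-again improvement compounded every year for fifty years comes out considerably larger, in the hundreds of millions. Either way the point stands: a modest-sounding annual gain, held steady for decades, turns into an astronomical multiple.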

Moore’s Law is one of the few Moira threads we’ve teased out in our short history in the technium. There must be others. Most of the technium’s predetermined developments remain hidden, not yet uncovered, by tools not yet invented. But we’ve learned to look for them. Searching, we can see similar laws peeking out now. These “laws” are reflexes of the technium that kick in regardless of the social climate. They too will spawn progress, and inspire new powers and new desires as they unroll in ordered sequence. Perhaps these self-governing dynamics will appear in genetics, or in pharmaceuticals, or in cognition. Once a dynamic like Moore’s Law is launched and made visible, the fuels of finance, competition, and markets will push the law to its limits and keep it riding along that curve until it has consumed its physical potential.

Our choice, and it is significant, is to prepare for the gift, and for the problems it will also bring. We can choose to get better at anticipating these inevitable surges. We can choose to educate ourselves and our children to become smartly literate and wise in their use. And we can choose to modify our legal/political/economic assumptions to meet the ordained evolution ahead. But we cannot escape from them.

When we spy our technological fate in the distance we should not reel back in horror of its inevitability; rather we should lurch forward in preparation.